Lightweight containerization with Tupperware

Nov 3, 2017


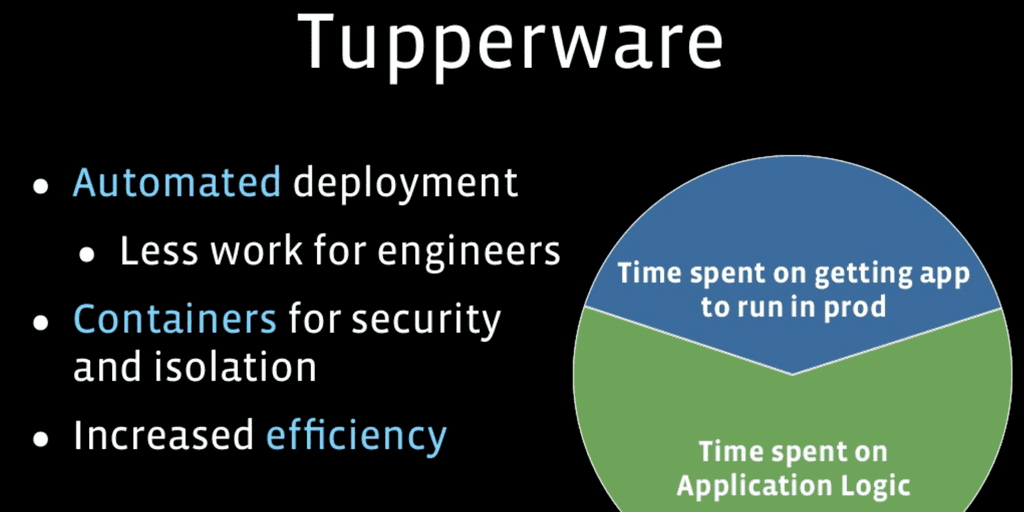

In this article, I present Tupperware, the lightweight containerization system built by Facebook.

What is Tupperware

Tupperware is an in-house framework written and used internally at Facebook. It is a container scheduler that manages container-based applications and tasks. As a scheduler, it runs jobs in parallel to power Facebook’s services. It also isolates runtime environments and controls resource usage.
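To make the scheduler's role concrete, here is a minimal sketch of resource-aware placement. It is not Tupperware's algorithm, which is not public in this article: the `Host` and `Task` types, the first-fit strategy and the resource fields are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Host:
    # Hypothetical view of a machine's free resources.
    name: str
    cpus: int
    memory_gb: int
    tasks: List[str] = field(default_factory=list)


@dataclass
class Task:
    # Hypothetical resource request for one containerized task.
    name: str
    cpus: int
    memory_gb: int


def place(task: Task, hosts: List[Host]) -> Optional[str]:
    """First-fit placement: use the first host with enough free resources."""
    for host in hosts:
        if host.cpus >= task.cpus and host.memory_gb >= task.memory_gb:
            # Reserve the resources so later tasks see the reduced capacity.
            host.cpus -= task.cpus
            host.memory_gb -= task.memory_gb
            host.tasks.append(task.name)
            return host.name
    return None  # no host can run the task right now
```

A real scheduler would also weigh constraints such as locality, priorities and failure domains. The sketch only shows the basic idea of matching resource requests to hosts.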

Architecture

The following table compares the components the industry uses to run containers with Tupperware’s equivalents.

| Industry | Tupperware |
|---|---|
| Etcd, Consul | ZooKeeper-based discovery |
| Kubernetes, Docker Swarm, Chronos | Tupperware Scheduler |
| Docker Networking, CoreOS Flannel | Tupperware ILA |
| Containers | Containers |
| Docker Engine, rkt | Tupperware Agent |
| KVM, Hyper-V, LXC | Facebook hosts |

Facebook follows the same pattern as the industry for deploying containers. The main difference is that the container engine and resource scheduling are all managed by Tupperware: no Swarm, no Docker Engine, no KVM. Note that Docker Swarm can also use ZooKeeper-based discovery.

We can imagine that Facebook started using Tupperware several years ago, when ZooKeeper was the only mature and battle-tested solution available.

Tupperware Agents

Tupperware agents are the heart of Tupperware. They run on Facebook’s hosts and manage every layer of the running application. They are composed of:

- Task manager

- Package manager

- Volume manager

- Resource manager

- Scheduler heartbeat
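As an illustration of the scheduler heartbeat component, the sketch below shows how an agent could report its state and how a scheduler could decide whether the agent is still alive. The `Agent` class, the report fields and the 30-second timeout are hypothetical, not Tupperware's actual protocol.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Agent:
    # Hypothetical agent state: which tasks run on this host.
    host: str
    running_tasks: Dict[str, str] = field(default_factory=dict)

    def heartbeat(self, now: Optional[float] = None) -> dict:
        """Build the status report the agent would send to the scheduler."""
        return {
            "host": self.host,
            "timestamp": time.time() if now is None else now,
            "tasks": dict(self.running_tasks),
        }


def is_alive(last_heartbeat: dict, now: float, timeout_s: float = 30.0) -> bool:
    """Scheduler side: an agent is alive if its last report is recent enough."""
    return now - last_heartbeat["timestamp"] <= timeout_s
```

When heartbeats stop arriving, a scheduler can treat the host as lost and reschedule its tasks elsewhere.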

Launching Containers

Every container is launched the same way. Each one starts from a BTRFS image, using a read-write snapshot on top of a read-only base. All of Facebook’s packages and other common tools are pre-installed. Containers boot a systemd init through systemd-nspawn and use cgroups v2.
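The launch sequence described above can be approximated with standard tools. The sketch below only builds the command lines and does not run them. `btrfs subvolume snapshot` and `systemd-nspawn --boot` are real commands, but the paths, the machine name and the `launch_commands` helper are assumptions. Tupperware's agent may well proceed differently.

```python
from typing import List


def launch_commands(base_image: str, container_root: str, name: str) -> List[List[str]]:
    """Return the commands to snapshot a base image and boot it as a container."""
    # Without the -r flag, the snapshot is writable even if the source
    # subvolume is read-only: this gives the read-write layer on a read-only base.
    snapshot = ["btrfs", "subvolume", "snapshot", base_image, container_root]
    # --boot runs the image's init (systemd) as PID 1 inside the container.
    boot = [
        "systemd-nspawn",
        "--boot",
        f"--directory={container_root}",
        f"--machine={name}",
    ]
    return [snapshot, boot]


if __name__ == "__main__":
    # Hypothetical paths, printed rather than executed (both commands need root).
    for cmd in launch_commands("/var/lib/images/base", "/var/lib/containers/task-1", "task-1"):
        print(" ".join(cmd))
```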

Image layering

Every image on Tupperware is layered as follows:

- Running task

- Application image

- Facebook image

- Base OS Image

The base OS image is based on Red Hat. It is the stock official image (Facebook occasionally contributes bug fixes upstream, so they are distributed officially in subsequent versions).

The Facebook image applies Facebook’s general customizations to the base image: custom repositories, internal programs, modules (think of YARN!) and network settings.

These two layers are identical across the majority of Facebook’s running tasks.

The application image contains instructions required by the running task.
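One way to picture the layering is as a stack of mappings where the upper layers shadow the lower ones and only the top layer is writable. The sketch below uses Python's `ChainMap` as a mental model. The file paths and contents are invented for illustration, and BTRFS snapshots work at the block level rather than by per-file lookup.

```python
from collections import ChainMap

# Hypothetical contents of each layer, from lowest to highest.
base_os = {"/etc/os-release": "base OS", "/usr/bin/python": "distro python"}
facebook = {"/etc/internal.repo": "internal repository", "/usr/bin/python": "internal python"}
application = {"/opt/app/run": "application binary"}
running_task = {}  # the only writable layer

# Lookups go through the layers from left to right: upper layers win.
view = ChainMap(running_task, application, facebook, base_os)

# Writes always land in the first mapping, leaving shared layers untouched.
view["/tmp/state"] = "scratch data"

print(view["/usr/bin/python"])  # resolved from the Facebook layer
print(sorted(running_task))     # only the task's own writes
```

Because the lower layers never change, many running tasks can share them.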

Why BTRFS

While reading this article, you might wonder why BTRFS is used for the lowest layer of the image. It was chosen because it provides the following features:

- Copy on write

- Subvolumes
  - containers can mount volumes
  - easy to manage

- Snapshots (RO and RW)
  - make it easy to go back in time

- Binary diffs
  - lower disk space usage
  - lower disk I/O usage
  - improved disk data caching
  - independent version layers
  - different update schedules for layers

- Quotas
  - useful to prevent one container from taking all the disk space at the expense of other containers

- Cgroups IO Control
  - provides resource isolation
  - disk isolation
  - memory isolation
  - CPU isolation
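To illustrate the resource isolation items, the sketch below builds the values one would write to the cgroup v2 interface files `memory.max`, `cpu.max` and `io.max`. The file names and value formats come from the Linux cgroup v2 interface. The helper function, the chosen limits and the `8:0` device number are assumptions, and nothing here reflects how Tupperware configures its containers.

```python
from typing import Dict


def cgroup_v2_limits(
    memory_bytes: int,
    cpu_quota_us: int,
    cpu_period_us: int,
    device: str,
    write_bps: int,
) -> Dict[str, str]:
    """Map cgroup v2 interface files to the content to write into them."""
    return {
        # Hard memory limit, in bytes.
        "memory.max": str(memory_bytes),
        # CPU time allowed per period, both in microseconds.
        "cpu.max": f"{cpu_quota_us} {cpu_period_us}",
        # Write bandwidth cap for one block device, given as MAJOR:MINOR.
        "io.max": f"{device} wbps={write_bps}",
    }


if __name__ == "__main__":
    limits = cgroup_v2_limits(512 * 1024**2, 50_000, 100_000, "8:0", 10 * 1024**2)
    for filename, content in limits.items():
        print(f"{filename}: {content}")
```

With these example values, the container gets 512 MiB of memory, half a CPU and 10 MiB/s of write bandwidth on the chosen device.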

Building images

Images are built using Buck, Facebook’s build tool.

Buck was chosen for the following features:

- Declarative image building

- Fast parallel builds

- Reproducible builds

- Incremental builds

- Separation of build and runtime

- Fully self contained

- Provides true FS isolation

- Testable
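Reproducible and incremental builds usually rest on one idea: derive a key from a target's declaration and from the keys of its dependencies, then rebuild only when that key changes. The sketch below illustrates the idea with a hypothetical `BuildCache`. It is not how the build tool is implemented, and the spec format is invented.

```python
import hashlib
import json
from typing import Dict, Iterable


def target_key(spec: dict, dep_keys: Iterable[str]) -> str:
    """Hash the declarative spec together with the keys of its dependencies."""
    payload = json.dumps({"spec": spec, "deps": sorted(dep_keys)}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class BuildCache:
    def __init__(self) -> None:
        self.artifacts: Dict[str, str] = {}
        self.builds = 0  # number of real (non-cached) builds

    def build(self, spec: dict, dep_keys: Iterable[str] = ()) -> str:
        key = target_key(spec, dep_keys)
        if key not in self.artifacts:
            # Cache miss: the spec or one of its dependencies changed.
            self.builds += 1
            self.artifacts[key] = f"image-{key[:12]}"
        return key


if __name__ == "__main__":
    cache = BuildCache()
    base = cache.build({"name": "base", "packages": ["systemd"]})
    cache.build({"name": "app", "files": ["run"]}, [base])
    cache.build({"name": "base", "packages": ["systemd"]})  # cached, no rebuild
    print(cache.builds)
```

Changing the base layer's declaration changes its key, which in turn changes the key of every image built on top of it.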

Systemd init

To finish, let’s dive into how containers are launched with systemd.

Systemd is container-aware and allows SSH connections into the container, which is useful for debugging or running specific commands. It relies on systemd-nspawn and also makes container logs available outside the container. Finally, although it is not advised, it can run containers at build time (something Docker, for example, does not allow).
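On a stock systemd host, similar debugging and logging access is available through `machinectl` and `journalctl`. The sketch below builds those command lines for a container started with systemd-nspawn. It uses `machinectl shell` rather than SSH, the machine name is hypothetical, and the article does not say which of these commands Facebook actually uses.

```python
from typing import Dict, List


def debug_commands(machine: str) -> Dict[str, List[str]]:
    """Return host-side commands to inspect a systemd-nspawn container."""
    return {
        # List the containers registered with systemd-machined.
        "list": ["machinectl", "list"],
        # Open an interactive shell inside the container.
        "shell": ["machinectl", "shell", machine],
        # Read the container's journal from the host.
        "logs": ["journalctl", f"--machine={machine}", "--no-pager"],
    }


if __name__ == "__main__":
    for purpose, cmd in debug_commands("task-1").items():
        print(f"{purpose}: {' '.join(cmd)}")
```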

Conclusion

To conclude, we can say that Facebook is aware of industry practices. However, instead of relying on the industry’s current technologies for container management, they chose to develop and maintain a different stack internally. I think this choice was made at a time when the industry was still discovering containers and did not yet provide production-ready tools in terms of stability and features.

Facebook is not the only company to have developed its own container scheduler. Elastic has done the same with ECE. That is what the conference emphasized: sometimes it makes sense for companies to bootstrap and run their own solution. It is a reasonable choice when no solution available on the market satisfies internal criteria and constraints.