Rebuilding HDP Hive: patch, test and build

Oct 6, 2020



The Hortonworks HDP distribution will soon be deprecated in favor of Cloudera’s CDP. One of our clients wanted a new Apache Hive feature backported into HDP 2.6.0. We thought it was a good opportunity to try to manually rebuild HDP 2.6.0’s Hive version. This article will go through the process of backporting a list of patches provided by Hortonworks on top of the open source Hive project in the most automated way.

Re-building HDP 2.6.0’s Hive

Our first step was to see if we were able to build Apache Hive as it is in HDP 2.6.0.

For each HDP release, Hortonworks (now Cloudera) describes the list of patches applied on top of a given component’s release in the release notes. We will retrieve here the list of patches applied on top of Apache Hive 2.1.0 for HDP 2.6.0 and put them in a text file:

cat hdp_2_6_0_0_patches.txt

HIVE-9941

HIVE-12492

HIVE-14214

...

...

HIVE-16319

HIVE-16323

HIVE-16325

We know there are 120 of them:

wc -l hdp_2_6_0_0_patches.txt

120 hdp_2_6_0_0_patches.txt

Now comes the question: how do we apply those patches?

Applying the patches in the order provided by Hortonworks

The first step is to clone the Apache Hive repository and check out the version that HDP 2.6.0 embeds, which is 2.1.0:

git clone https://github.com/apache/hive.git

cd hive

git checkout rel/release-2.1.0

Our first guess was that the patches were listed in HDP’s release notes in the chronological order in which they were merged into Hive’s master branch, so we tried to get the patches and apply them in that order.

For each patch in the list, we need to fetch the patch file attached to the associated JIRA and run the git apply command against it. This can be tedious for a large number of patches, so we will see later in this article how to automate it.

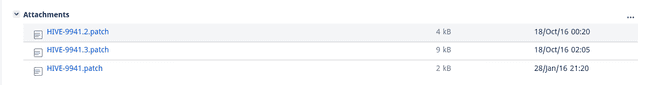

Let’s start small by trying to apply the first patch of our list: HIVE-9941. We can see in the associated JIRA issue that there are multiple attachments:

We will pick the most recent one and apply it:

wget https://issues.apache.org/jira/secure/attachment/12833864/HIVE-9941.3.patch

git apply HIVE-9941.3.patch

HIVE-9941.3.patch:17: new blank line at EOF.

+

HIVE-9941.3.patch:42: new blank line at EOF.

+

HIVE-9941.3.patch:71: new blank line at EOF.

+

HIVE-9941.3.patch:88: new blank line at EOF.

+

warning: 4 lines add whitespace errors.

The git apply command gave us a few warnings about whitespace and blank lines, but the patch applied. The success is confirmed with git status:

git status

HEAD detached at rel/release-2.1.0

Untracked files:

(use "git add <file>..." to include in what will be committed)

HIVE-9941.3.patch

ql/src/test/queries/clientnegative/authorization_alter_drop_ptn.q

ql/src/test/queries/clientnegative/authorization_export_ptn.q

ql/src/test/queries/clientnegative/authorization_import_ptn.q

ql/src/test/queries/clientnegative/authorization_truncate_2.q

ql/src/test/results/clientnegative/authorization_alter_drop_ptn.q.out

ql/src/test/results/clientnegative/authorization_export_ptn.q.out

ql/src/test/results/clientnegative/authorization_import_ptn.q.out

ql/src/test/results/clientnegative/authorization_truncate_2.q.out

nothing added to commit but untracked files present (use "git add" to track)

In this case, the patch only created new files and did not modify any existing file, but it worked regardless.

Moving on to the second patch of the list, HIVE-12492:

wget https://issues.apache.org/jira/secure/attachment/12854164/HIVE-12492.02.patch

git apply HIVE-12492.02.patch

HIVE-12492.02.patch:362: trailing whitespace.

Map 1

[...]

error: src/java/org/apache/hadoop/hive/conf/HiveConf.java: No such file or directory

error: src/test/resources/testconfiguration.properties: No such file or directory

error: src/java/org/apache/hadoop/hive/ql/optimizer/ConvertJoinMapJoin.java: No such file or directory

error: src/java/org/apache/hadoop/hive/ql/optimizer/stats/annotation/StatsRulesProcFactory.java: No such file or directory

error: src/java/org/apache/hadoop/hive/ql/stats/StatsUtils.java: No such file or directory

This time, we encountered multiple No such file or directory errors. Tracking down their cause is tricky. Let’s see why, and why it makes the whole patching process hard to automate.

Once we download and open the HIVE-12492.02.patch file, we see at the beginning that the first change targets the file common/src/java/org/apache/hadoop/hive/conf/HiveConf.java:

diff --git common/src/java/org/apache/hadoop/hive/conf/HiveConf.java common/src/java/org/apache/hadoop/hive/conf/HiveConf.java

index 3777fa9..08422d5 100644

--- common/src/java/org/apache/hadoop/hive/conf/HiveConf.java

+++ common/src/java/org/apache/hadoop/hive/conf/HiveConf.java

@@ -1422,6 +1422,11 @@ private static void populateLlapDaemonVarsSet(Set<String> llapDaemonVarsSetLocal

"This controls how many partitions can be scanned for each partitioned table.\n" +

[...]

But the error says that src/java/org/apache/hadoop/hive/conf/HiveConf.java does not exist, rather than common/src/java/org/apache/hadoop/hive/conf/HiveConf.java. Notice that the path in the error is the path from the patch without its leading common component. This is because git apply has a -p<n> option (described in the git-apply documentation) which removes <n> leading components from file paths. It defaults to 1, not 0, which removes the first directory: common in our case.
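Choosing the strip level can be scripted by looking at the diff --git headers of a patch. The following is a minimal sketch: detect_strip_level is a hypothetical helper name (not a git command), and it assumes that a patch either uses the a/ and b/ prefixes in all its headers or in none of them.

```shell
# detect_strip_level PATCH_FILE
# Hypothetical helper: prints the -p level the patch seems to need.
#   1 when its "diff --git" headers carry the a/ and b/ prefixes
#   0 otherwise (paths are already relative to the repository root)
# Assumption: prefixes are used consistently within one patch file.
detect_strip_level() {
  local patch_file="$1"
  if grep -qE '^diff --git a/.+ b/' "$patch_file"; then
    echo 1
  else
    echo 0
  fi
}

# Possible use (not executed here):
#   git apply -p"$(detect_strip_level HIVE-12492.02.patch)" HIVE-12492.02.patch
```

A project whose top-level directory is literally named a would fool this check, so the result is best treated as a hint rather than a guarantee.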

We did not encounter this problem with the previous patch because its diff used the a/ and b/ prefixes in the file paths, for example:

diff --git a/ql/src/test/queries/clientnegative/authorization_alter_drop_ptn.q b/ql/src/test/queries/clientnegative/authorization_alter_drop_ptn.q

Let’s try again with -p0:

git apply -p0 HIVE-12492.02.patch

HIVE-12492.02.patch:362: trailing whitespace.

Map 1

[...]

error: patch failed: common/src/java/org/apache/hadoop/hive/conf/HiveConf.java:1422

error: common/src/java/org/apache/hadoop/hive/conf/HiveConf.java: patch does not apply

error: patch failed: itests/src/test/resources/testconfiguration.properties:501

error: itests/src/test/resources/testconfiguration.properties: patch does not apply

error: patch failed: ql/src/java/org/apache/hadoop/hive/ql/optimizer/ConvertJoinMapJoin.java:53

error: ql/src/java/org/apache/hadoop/hive/ql/optimizer/ConvertJoinMapJoin.java: patch does not apply

error: patch failed: ql/src/java/org/apache/hadoop/hive/ql/optimizer/stats/annotation/StatsRulesProcFactory.java:51

error: ql/src/java/org/apache/hadoop/hive/ql/optimizer/stats/annotation/StatsRulesProcFactory.java: patch does not apply

This is better, but the patch still won’t apply. This time the error is patch does not apply: the files exist, but their content does not match what the patch expects.

Looking back at the JIRA list given by Hortonworks, we realized that the issues were actually listed in alphanumerical order. Take the example of HIVE-14405 and HIVE-14432: the first one was created before the second one, but the patch of the latter was released before the patch of the former. If these two patches modify the same file, it could lead to the error we just encountered.

Applying the patches in the alphanumerical order seems like a bad choice in this case. We will now order the patches in chronological order according to the date when they were committed/applied in the master branch.

Applying the JIRA patches in the commit order

Let’s try to iterate through every patch to see how many patches we can apply by going with this strategy.

Getting and applying every patch file automatically from each JIRA’s attachments can be tedious:

- There are 120 of them

- We have just seen that some patches apply at the -p0 level while others apply at -p1

- Some JIRAs indicated by Hortonworks do not have any attachments (ex: HIVE-16238)

- Some JIRAs have attachments that are not patches (ex: HIVE-16323 has a screenshot attached next to the patches)

- Some JIRAs have patches in .txt format instead of .patch (ex: HIVE-15991)
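Several of these cases come down to picking the right attachment by name and creation date. As a sketch, assume each attachment of an issue has already been reduced to one line made of its creation timestamp and its file name. This input format, the latest_patch_attachment helper and the rule "the file name starts with the JIRA id and ends in .patch or .txt" are assumptions made for illustration.

```shell
# latest_patch_attachment JIRA_ID
# Hypothetical helper. Reads "<created_epoch> <filename>" lines on stdin and
# prints the newest file name that starts with the JIRA id and ends in .patch
# or .txt. Prints nothing when the issue has no usable attachment.
# Assumption: file names contain no spaces.
latest_patch_attachment() {
  local jira_id="$1"
  awk -v id="$jira_id" '
    # The id must be followed by a non-digit so that HIVE-15991 does not
    # also accept an attachment named after HIVE-159910.
    $2 ~ ("^" id "[^0-9]") && $2 ~ /\.(patch|txt)$/ { print $1, $2 }
  ' | sort -k1,1n | tail -n 1 | cut -d' ' -f2
}
```

An empty result covers both the "no attachment" and the "only a screenshot" situations, which the caller can then report for manual handling.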

Here is an excerpt (in CoffeeScript) from the script we made to get the latest version of each patch from JIRA:

generate_patch_manifest = ->

# For each patch in the given list, return a manifest

patches_manifest = hdp_2_6_0_0_patches

# Generate a manifest with patch id, description, creation date and url

.map (patch) ->

# Parse the JIRA id

jira_id = patch.split(/:(.+)/)[0]

# Parse the commit description

commit_description = patch.split(/:(.+)/)[1]

# Get all the attachments

response = await axios.get "https://issues.apache.org/jira/rest/api/2/search?jql=key=#{jira_id}&fields=attachment"

# Filter the attachments that are actual patches

attachments = response.data.issues[0].fields.attachment.filter (o) -> o.filename.includes jira_id

# Get only the latest patch

most_recent_created_time = Math.max.apply Math, attachments.map (o) -> Date.parse o.created

most_recent_created_attachment = attachments.filter (o) -> Date.parse(o.created) is most_recent_created_time

# Create the patch manifest (implicit return in CoffeeScript)

id: jira_id

description: commit_description

created: Date.parse most_recent_created_attachment[0].created

url: most_recent_created_attachment[0].content

# Order the patches by date

.sort (a,b) ->

a.created - b.created

# Write the object to a YAML file

fs.writeFile './patch_manifest.yml', yaml.safeDump(patches_manifest), (err) ->

if err

then console.error err

else console.info 'done'

This method generates a patch_manifest.yml file that looks like:

- id: $JIRA_NUMBER

description: $COMMIT_MESSAGE

created: $TIMESTAMP

url: $LATEST_PATCH_URL

This manifest will help us apply the patches in the right order.

Once we have built this manifest, we iterate through the list, then download and try to apply as many patches as we can. Here is another code excerpt to do that:

# For each patch in the manifest

for patch, index in patches_manifest

try

await nikita.execute """

# Download the patch file

wget -q -N #{patch.url}

# If the patch has the "a/" leading part in the file paths, apply with -p1

if grep "git \\\\ba/" patches/#{patch.url.split('/').pop()}; then

git apply patches/#{patch.url.split('/').pop()}

# If not, then apply with -p0

else

git apply -p0 patches/#{patch.url.split('/').pop()}

fi

# Commit changes

git add -A

git commit -m "Applied #{patch.url.split('/').pop()}"

"""

console.info "Patch #{patch.url.split('/').pop()} applied successfully"

catch err

console.error "Patch #{patch.url.split('/').pop()} failed to apply"

This did not go as well as expected. Only 19 of the 120 patches in the list applied successfully. All failures were due to patch does not apply errors, which could be because:

- We are still not applying the fixes in the right order

- We are missing some code prerequisites (i.e. the JIRA list is incomplete)

- Some code needs to be modified to be backported

- All of the above

In the next part, we will use another strategy by cherry-picking the commits related to the HIVE-XXXXX JIRAs we want to have in our build.

Applying the patch by pushed-to-master chronological date

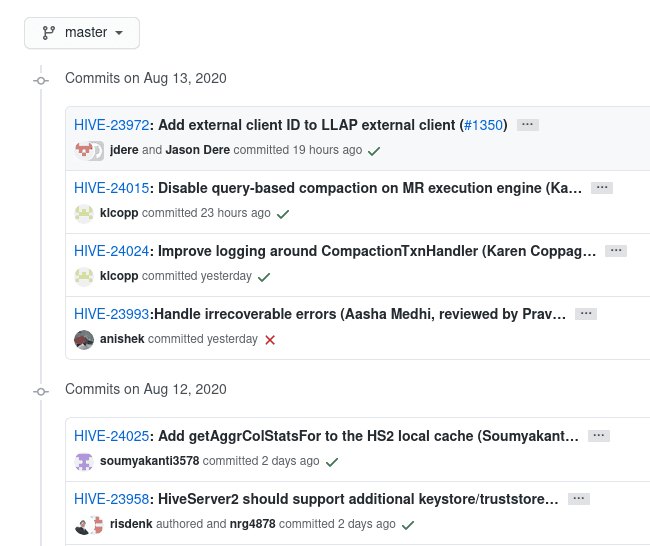

Every commit message in Apache Hive has the related JIRA issue in its description:

In this attempt, we are going to get the list of all the commits pushed to the Apache Hive master branch in order to generate the list of the commits containing the patches we want to apply in a chronological order.

git checkout master

git log --pretty=format:'%at;%H;%s' > apache_hive_master_commits.csv

The git log command with the right parameters generates a CSV file with the format: timestamp;commit_hash;commit_message.

For example:

head apache_hive_master_commits.csv

1597212601;a430ac31441ad2d6a03dd24e3141f42c79e022f4;HIVE-23995:Don't set location for managed tables in case of replication (Aasha Medhi, reviewed by Pravin Kumar Sinha)

1597206125;ef47b9eb288dec6e6a014dd5e52accdf0ac3771f;HIVE-24030: Upgrade ORC to 1.5.10 (#1393)

1597144690;d4af3840f89408edea886a28ee4ae7d79a6f16f8;HIVE-24014: Need to delete DumpDirectoryCleanerTask (Arko Sharma, reviewed by Aasha Medhi)

1597135126;71c9af7b8ead470520a6c3a4848be9c67eb80f10;HIVE-24001: Don't cache MapWork in tez/ObjectCache during query-based compaction (Karen Coppage, reviewed by Marta Kuczora)

1597108110;af911c9fe0ff3bb49d80f6c0109d30f5e1046849;HIVE-23996: Remove unused line in UDFArgumentException (Guo Philipse reviewed by Peter Vary, Jesus Camacho Rodriguez)

1597107811;17ee33f4f4d8dc9437d1d3a47663a635d2e47b58;HIVE-23997: Some logs in ConstantPropagateProcFactory are not straightforward (Zhihua Deng, reviewed by Jesus Camacho Rodriguez)

1596833809;5d9a5cf5a36c1d704d2671eb57547ea50249f28b;HIVE-24011: Flaky test AsyncResponseHandlerTest ( Mustafa Iman via Ashutosh Chauhan)

1596762366;9fc8da6f32b68006c222ccfd038719fc89ff8550;HIVE-24004: Improve performance for filter hook for superuser path(Sam An, reviewed by Naveen Gangam)

1595466890;7293956fc2f13ccbe72825b67ce1b53dce536359;HIVE-23901: Overhead of Logger in ColumnStatsMerger damage the performance

1593631609;4457c3ec9360650be021ea84ed1d5d0f007d8308;HIVE-22934 Hive server interactive log counters to error stream ( Ramesh Kumar via Ashutosh Chauhan)

The next script keeps only the commits we want to apply by matching the JIRA ids provided by Hortonworks. Then we sort the CSV by date from oldest to newest:

cat hdp_2_6_0_0_patches.txt | while read in; do grep $in apache_hive_master_commits.csv; done > commits_to_apply.csv

sort commits_to_apply.csv > sorted_commits_to_apply.csv

Now that we have an ordered list of the commits we want to apply, we can go back to the 2.1.0 release tag and cherry-pick these commits.

Cherry-picking seems like a better solution than applying patches in our situation. Indeed, we have just seen that we could not apply some patches because of missing code dependencies.
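Two details of this pipeline are worth hedging against. A plain grep matches the id anywhere in the line, so a hypothetical HIVE-1449 entry would also select a HIVE-14490 commit, and sort without -n compares the timestamps as text, which only works while they all have the same number of digits. The following sketch is a stricter variant; select_commits is a hypothetical helper, and it assumes that the commit subject starts with the JIRA id, so reverts or addendum commits worded differently are left out.

```shell
# select_commits PATCH_LIST COMMITS_CSV
# Hypothetical helper. Prints the "timestamp;hash;subject" rows whose subject
# starts with one of the JIRA ids listed in PATCH_LIST, oldest first.
# Assumptions: PATCH_LIST is not empty and holds one id per line.
select_commits() {
  local patch_list="$1" commits_csv="$2"
  awk -F';' '
    # First file: remember every wanted JIRA id.
    NR == FNR { if ($0 != "") wanted[$0] = 1; next }
    # Second file: extract the full id at the start of the subject and
    # keep the row only on an exact match.
    match($3, /^HIVE-[0-9]+/) {
      id = substr($3, RSTART, RLENGTH)
      if (id in wanted) print
    }
  ' "$patch_list" "$commits_csv" | sort -t';' -k1,1n
}

# Possible use (not executed here):
#   select_commits hdp_2_6_0_0_patches.txt apache_hive_master_commits.csv \
#     > sorted_commits_to_apply.csv
```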

Let’s try with the first one, the JIRA is HIVE-14214 and the commit hash is b28ec7fdd8317b47973c6c8f7cdfe805dc20a806:

head -1 sorted_commits_to_apply.csv

1469348583;b28ec7fdd8317b47973c6c8f7cdfe805dc20a806;HIVE-14214: ORC Schema Evolution and Predicate Push Down do not work together (no rows returned) (Matt McCline, reviewed by Prasanth Jayachandran/Owen O'Malley)

git cherry-pick -x 15dd7f19bc49ee7017fa5bdd65f9b4ec4dd019d2 --strategy-option theirs

CONFLICT (modify/delete): ql/src/test/results/clientpositive/tez/explainanalyze_5.q.out deleted in HEAD and modified in 15dd7f19bc... HIVE-15084: Flaky test: TestMiniTezCliDriver:explainanalyze_1, 2, 3, 4, 5 (Pengcheng Xiong). Version 15dd7f19bc... HIVE-15084: Flaky test: TestMiniTezCliDriver:explainanalyze_1, 2, 3, 4, 5 (Pengcheng Xiong) of ql/src/test/results/clientpositive/tez/explainanalyze_5.q.out left in tree.

[...]

CONFLICT (modify/delete): ql/src/test/queries/clientpositive/explainanalyze_1.q deleted in HEAD and modified in 15dd7f19bc... HIVE-15084: Flaky test: TestMiniTezCliDriver:explainanalyze_1, 2, 3, 4, 5 (Pengcheng Xiong). Version 15dd7f19bc... HIVE-15084: Flaky test: TestMiniTezCliDriver:explainanalyze_1, 2, 3, 4, 5 (Pengcheng Xiong) of ql/src/test/queries/clientpositive/explainanalyze_1.q left in tree.

error: could not apply 15dd7f19bc... HIVE-15084: Flaky test: TestMiniTezCliDriver:explainanalyze_1, 2, 3, 4, 5 (Pengcheng Xiong)

We removed some of the output, but the takeaway here is that we have conflicts. git cherry-pick tries to merge a commit from the official Hive repository into our current local branch. The benefit of cherry-picking is that we are comparing two files instead of just applying a diff, which leaves us the choice of how to merge the content.

To favor the incoming version when both sides changed the same lines, we add the --strategy-option theirs parameter to the cherry-pick command. This solves most conflicts, but we can still encounter some like:

Unmerged paths:

(use "git add/rm ..." as appropriate to mark resolution)

deleted by us: llap-server/src/java/org/apache/hadoop/hive/llap/io/encoded/SerDeEncodedDataReader.java

added by them: llap-server/src/java/org/apache/hadoop/hive/llap/io/encoded/VectorDeserializeOrcWriter.java

The conflict resolution must be done manually with git add or git rm depending on the situation.
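The choice between git add and git rm can be derived from the two-letter codes that git status --porcelain prints for unmerged paths (DD, AU, UD, UA, DU, AA, UU). The sketch below encodes one possible policy in which the incoming side wins; both helper names and the policy itself are assumptions, and the result should still be reviewed before continuing the cherry-pick.

```shell
# resolve_action XY
# Hypothetical helper. Maps an unmerged status code from
# `git status --porcelain` to the git subcommand resolving it when the
# incoming ("theirs") side is preferred. This policy is an assumption.
resolve_action() {
  case "$1" in
    DD|UD) echo "rm" ;;             # both deleted / deleted by them
    DU|UA|AU|AA|UU) echo "add" ;;   # keep the version left in the tree
    *) echo "skip" ;;               # not an unmerged entry
  esac
}

# resolve_unmerged
# Applies the mapping to every unmerged path of the repository in the
# current directory. Paths containing spaces are not handled.
resolve_unmerged() {
  git status --porcelain | while read -r code path; do
    action=$(resolve_action "$code")
    [ "$action" = "skip" ] || git "$action" -- "$path"
  done
}
```

With the two paths shown above, "deleted by us" (DU) and "added by them" (UA) both map to git add, which keeps the files brought by the cherry-picked commit.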

Using the cherry-picking method and the manual resolutions for some patches, we were able to import all 120 JIRA patches in our code branch.

Important note: We tried to automate the process as much as possible, but keep in mind that running the unit tests after patching is strongly recommended before building and applying the fix to any production cluster. We will see that in the next part.

git checkout rel/release-2.1.0

while read line; do

commit=$(echo $line | awk -F "\"*;\"*" '{print $2}')

if git cherry-pick -x $commit --strategy-option theirs > patches/logs/cherry_pick_$commit.out 2>&1; then

echo "Cherry picking $commit SUCCEEDED"

else

git status > patches/logs/cherry_pick_$commit.out 2>&1

git status | sed -n 's/.* by .*://p' | xargs git add

git -c core.editor=true cherry-pick --continue

echo "Cherry picking $commit SUCCEEDED with complications"

fi

done < sorted_commits_to_apply.csv

With the script above, we are able to apply 100% of the patches. In the next part, we will go over the steps to build and test the Hive distribution.

Compilation

Before running the unit tests, let’s try to see if we can actually build the Hive release:

mvn clean package -Pdist -DskipTests

After a few minutes, the build stops with the following error:

[ERROR] /home/leo/Apache/hive/storage-api/src/test/org/apache/hadoop/hive/ql/exec/vector/TestStructColumnVector.java:[106,5] cannot find symbol

symbol: class Timestamp

location: class org.apache.hadoop.hive.ql.exec.vector.TestStructColumnVector

Looking at the file ./storage-api/src/test/org/apache/hadoop/hive/ql/exec/vector/TestStructColumnVector.java, the class java.sql.Timestamp is indeed not imported. Note that -DskipTests only skips running the tests: the test sources are still compiled, which is why an error in a test class stops the build.

This file was modified by cherry-picking HIVE-16245. This patch changes 3 files:

- ql/src/java/org/apache/hadoop/hive/ql/optimizer/physical/Vectorizer.java

- storage-api/src/java/org/apache/hadoop/hive/ql/exec/vector/VectorizedRowBatch.java

- storage-api/src/test/org/apache/hadoop/hive/ql/exec/vector/TestStructColumnVector.java

How did Hortonworks apply this specific patch without breaking the build? In the next part, we will dig into Hortonworks’ public Hive repository to find clues on how they applied the patches listed for HDP 2.6.0.0.

Exploring Hortonworks’ hive2-release project

On GitHub, Hortonworks keeps a public repository for every component in the HDP stack. The one we want to check is [hive2-release](https://github.com/hortonworks/hive2-release):

git clone https://github.com/hortonworks/hive2-release.git

cd hive2-release && ls

README.md

The repository seems empty at first, but the files can actually be found by checking out the tags. There is one tag per HDP release.

git checkout HDP-2.6.0.3-8-tag

Let’s try to find out how Hortonworks applied the patch that caused the build error we saw earlier.

git log --pretty=format:'%at;%H;%s' | grep HIVE-16245

1489790314;2a9150604edfbc2f44eb57104b4be6ac3ed084c7;BUG-77383 backport HIVE-16245: Vectorization: Does not handle non-column key expressions in MERGEPARTIAL mode

Now, let’s see which files were modified by this commit:

git diff 2a9150604edfbc2f44eb57104b4be6ac3ed084c7^ | grep diff

diff --git a/ql/src/java/org/apache/hadoop/hive/ql/optimizer/physical/Vectorizer.java b/ql/src/java/org/apache/hadoop/hive/ql/optimizer/physical/Vectorizer.java

diff --git a/storage-api/src/java/org/apache/hadoop/hive/ql/exec/vector/VectorizedRowBatch.java b/storage-api/src/java/org/apache/hadoop/hive/ql/exec/vector/VectorizedRowBatch.java

Unlike the patch attached to the JIRA (and pushed to Apache Hive’s master branch), the backport made by Hortonworks only changes 2 of the 3 files. Either there is some black magic involved, or the process is manual and not fully documented. The file that caused the build error we encountered earlier is not changed here.
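This kind of comparison can be repeated for other patches by diffing the list of files touched upstream against the list touched by the backport. The sketch below only does the comparison: missing_from_backport is a hypothetical helper, and it assumes the two lists were produced beforehand, for example with git diff-tree --no-commit-id --name-only -r on each commit.

```shell
# missing_from_backport UPSTREAM_FILES BACKPORT_FILES
# Hypothetical helper. Each argument is a text file with one path per line.
# Prints the paths changed by the upstream commit that the backport commit
# did not touch.
missing_from_backport() {
  # -F: fixed strings, -x: whole-line match, -v: keep the non-matching lines
  grep -vxFf "$2" "$1" || true
}

# Possible use (not executed here), where <upstream-commit> stands for the
# Apache Hive commit of the JIRA and is left for the reader to look up:
#   git diff-tree --no-commit-id --name-only -r <upstream-commit> > upstream.txt
#   git diff-tree --no-commit-id --name-only -r 2a9150604edfbc2f44eb57104b4be6ac3ed084c7 > backport.txt
#   missing_from_backport upstream.txt backport.txt
```

A non-empty result does not prove the backport is wrong, only that it diverges from the upstream commit and deserves a manual look.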

Conclusion

Backporting a list of patches to an open source project like Apache Hive is hard to automate. With the HDP distribution and depending on the patches, Hortonworks has applied some manual changes to the source code or its engineers were working on a forked version of Hive. A good understanding of the global project structure (modules, unit tests, etc.) and its ecosystem (dependencies) is required in order to build a functional and tested release.