Hadoop cluster takeover with Apache Ambari

Nov 15, 2018


We recently migrated a large production Hadoop cluster from a “manual” automated install to Apache Ambari; we called this the Ambari Takeover. This is a risky process, and in this article we detail why the operation was required and how we did it.

Context

Back in 2012, one of our clients at Adaltas wanted to deploy a fully qualified on-prem Apache Hadoop cluster for production use cases. At the time, the technical specifications provided by our client were highly security oriented: the cluster had to have HA services, Kerberos authentication, SSL-secured communications, etc. Given the features available at that time, it was decided that the distribution would be Hortonworks HDP. In early 2013 the Ambari project had just graduated from the Apache Incubator program, and the tool was not what it is today, so we chose to deploy the cluster without Apache Ambari. This is when we created the Ryba project, which is introduced later in this article.

Since 2013 the cluster has evolved: nodes were added and the HDP stack went through several upgrades. When our client changed their strategy for cluster administration, we realized that Ryba was no longer the right tool: the cluster’s services and configurations should be managed by Ambari.

And so the ‘Ambari takeover’ project was started.

Ryba

Ryba is an open source project. Its main job is to bootstrap fully secured and functional Hadoop clusters. Ryba also serves as a configuration management tool for all the services. It is able to deploy HDFS, Kerberos, Hive, HBase, etc. It is written for Node.js, more precisely in CoffeeScript, which makes for easy-to-read code.

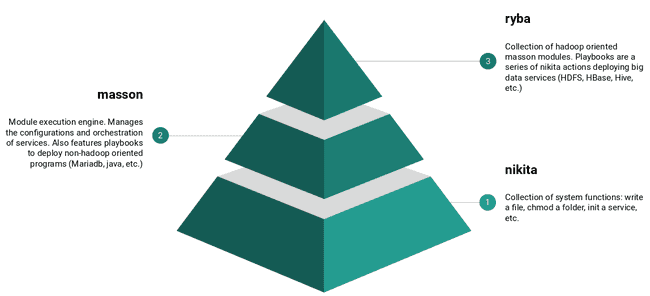

Ryba is based on two other projects also built at Adaltas: Masson and Nikita.

The first layer is Nikita: a collection of wrapped system functions that lets you do anything you want on your localhost or on a remote server via SSH. Nikita actions are really diverse: create a directory, render a file, initiate a systemd service, change iptables rules, etc.

These actions are used in Ryba which can be seen as a collection of Hadoop-oriented playbooks of Nikita actions.

Finally there is Masson: a module execution engine. Masson handles the dependencies and the sharing of configurations between the services. For example, if you deploy the HDFS NameNode using Ryba, Masson will remind you that you also need the JournalNode service (by reading the dependencies from the HDFS NameNode playbook). It is also able to read the KDC configuration to create the appropriate keytabs.
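To make the dependency idea concrete, here is a minimal sketch in Python. It is an illustration only, not Masson's actual implementation: the module names and the DEPENDENCIES table are hypothetical, and the ordering relies on the standard library's graphlib module (Python 3.9+).

```python
from graphlib import TopologicalSorter

# Hypothetical dependency table: each module lists the modules it requires.
DEPENDENCIES = {
    "hdfs_namenode": {"hdfs_journalnode", "zookeeper", "kerberos_client"},
    "hdfs_journalnode": {"kerberos_client"},
    "zookeeper": {"kerberos_client"},
    "kerberos_client": set(),
}


def missing_dependencies(selected):
    """Return the modules required (directly or transitively) but not selected."""
    required, stack = set(), list(selected)
    while stack:
        module = stack.pop()
        for dep in DEPENDENCIES.get(module, set()):
            if dep not in required:
                required.add(dep)
                stack.append(dep)
    return required - set(selected)


def deployment_order(selected):
    """Order the selected modules so that dependencies are deployed first."""
    chosen = set(selected)
    graph = {m: DEPENDENCIES.get(m, set()) & chosen for m in chosen}
    return list(TopologicalSorter(graph).static_order())


# Selecting only the NameNode reports what else has to be deployed.
print(sorted(missing_dependencies(["hdfs_namenode"])))
```

Asking for the NameNode alone reports the JournalNode (and the other hypothetical prerequisites) as missing, which is the kind of reminder described above.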

Here is a simplified example of a Ryba module:

    # HBase Master Install
    module.exports = header: 'HBase Master Install', handler: ({options}) ->
      # Creates IPTables rules on the node where the HBase Master service is deployed
      @tools.iptables
        header: 'IPTables'
        if: options.iptables
        rules: [
          { chain: 'INPUT', jump: 'ACCEPT', dport: options.hbase_site['hbase.master.port'], protocol: 'tcp', state: 'NEW', comment: "HBase Master" }
          { chain: 'INPUT', jump: 'ACCEPT', dport: options.hbase_site['hbase.master.info.port'], protocol: 'tcp', state: 'NEW', comment: "HMaster Info Web UI" }
        ]
      # Creates the user and group for 'hbase'
      @system.group header: 'Group', options.group
      @system.user header: 'User', options.user
      # Creates necessary directories on the destination node
      @call header: 'Layout', ->
        @system.mkdir
          target: options.pid_dir
          uid: options.user.name
          gid: options.group.name
          mode: 0o0755
        @system.mkdir
          target: options.log_dir
          uid: options.user.name
          gid: options.group.name
          mode: 0o0755
        @system.mkdir
          target: options.conf_dir
          uid: options.user.name
          gid: options.group.name
          mode: 0o0755
      # Installs the HBase master package
      @call header: 'Service', ->
        @service
          name: 'hbase-master'
        # Initiates a systemd script to operate the service
        @service.init
          header: 'Systemd Script'
          target: '/usr/lib/systemd/system/hbase-master.service'
          source: "#{__dirname}/../resources/hbase-master-systemd.j2"
          local: true
          context: options: options
          mode: 0o0640

This deploys the HBase Master service on a given node; the full source code can be checked out here. Every @ call is a Nikita action: adding iptables rules, creating a directory, initiating a systemd service, etc.

Ambari takeover

The challenge here was to give Apache Ambari full knowledge of our clusters’ topologies (which services are running and where they are running) as well as our configurations (maximum number of containers on YARN, heap size of the HBase RegionServers, etc.). The ‘ryba-ambari-takeover’ project started as a fork of Ryba. This way, we could ensure that the custom configurations of the cluster would be rendered the same way as they were with Ryba.

Before starting to take over Hadoop services, we need to install the Ambari Server and the Ambari Agents, and to create an empty cluster with no services. This is done through the API, as the WebUI does not allow you to do this. In the process we automated the cluster creation, node registration, etc.
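As an illustration of this bootstrap step, the following Python sketch only builds the REST requests for creating an empty cluster and attaching hosts to it; nothing is sent over the network. The endpoints follow Ambari's v1 REST API as commonly documented and should be checked against your Ambari version. The cluster name, stack version, hostname and the bootstrap_requests helper are placeholders, not part of ryba-ambari-takeover.

```python
import json


def bootstrap_requests(cluster, stack_version, hosts):
    """Build (method, path, body) tuples for an empty cluster and its hosts."""
    base = f"/api/v1/clusters/{cluster}"
    # Create the cluster with a stack version but no services.
    requests = [("POST", base, {"Clusters": {"version": stack_version}})]
    # Attach each host; its Ambari Agent must already be registered with the server.
    requests += [("POST", f"{base}/hosts/{host}", None) for host in hosts]
    return requests


# Placeholder values, for illustration only.
for method, path, body in bootstrap_requests("prod", "HDP-2.6", ["master01.example.com"]):
    print(method, path, json.dumps(body) if body else "")
```

When these requests are actually sent with an HTTP client, Ambari expects authentication and an X-Requested-By header on every modifying call.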

The main concept of the project is the following:

For each service deployed on the cluster (HDFS, HBase, Hive, etc.) and manageable by Ambari:

- Set the service as installed.

- Declare the component on a given node.

- Upload the configurations for the given component.

- Stop the service on the node.

- Delete the systemd or initd script on the node so that we are sure the service is run by Ambari.

- Start the service on Ambari.

Again, everything was done using Ambari’s API.
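The sequence above can be sketched as an ordered list of REST calls. The exact calls issued by the project are not reproduced in this article, so the endpoints and payloads below are assumptions based on Ambari's v1 REST API as commonly documented, and takeover_requests is a hypothetical helper. The Python sketch only builds the requests, it does not send them.

```python
def takeover_requests(cluster, service, component, host, config_type, properties, tag):
    """Build the ordered (method, path, body) calls for one component on one host."""
    base = f"/api/v1/clusters/{cluster}"
    svc = f"{base}/services/{service}"
    host_component = f"{base}/hosts/{host}/host_components/{component}"

    def state(new_state, context):
        # Payload asking Ambari to move a host component to a new state.
        return {"RequestInfo": {"context": context},
                "Body": {"HostRoles": {"state": new_state}}}

    return [
        # 1. Declare the service and its component on the cluster.
        ("POST", svc, None),
        ("POST", f"{svc}/components/{component}", None),
        # 2. Declare the component on the node.
        ("POST", host_component, None),
        # 3. Upload the existing configuration as the desired configuration.
        ("PUT", base, {"Clusters": {"desired_config": {
            "type": config_type, "tag": tag, "properties": properties}}}),
        # 4. Mark the component as installed (packages are already on the node).
        ("PUT", host_component, state("INSTALLED", "Takeover: install")),
        # 5. Outside the API: stop the old process and delete its systemd/initd script.
        # 6. Start the component through Ambari.
        ("PUT", host_component, state("STARTED", "Takeover: start")),
    ]
```

Keeping the plan as plain data makes it easy to review or log the calls before any of them touches a production cluster.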

Here is a step-by-step example with the one service to rule them all: Apache Zookeeper.

In this test cluster, the Zookeeper quorum consists of three nodes. We will ‘takeover’ them one by one to ensure quorum availability.
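The one-by-one approach can be sketched as a small rolling loop in Python. This is a simplified illustration: is_serving and takeover are hypothetical callbacks (the first could, for instance, send ZooKeeper's ruok command to a node, the second would run the takeover steps for that node).

```python
def rolling_takeover(nodes, is_serving, takeover):
    """Take over nodes one at a time while a strict majority keeps serving."""
    majority = len(nodes) // 2 + 1
    done = []
    for node in nodes:
        # The other nodes alone must still form a quorum while this one is stopped.
        others_up = sum(1 for n in nodes if n != node and is_serving(n))
        if others_up < majority:
            raise RuntimeError(f"refusing to stop {node}: quorum would be lost")
        takeover(node)
        # Do not move on until the node is back, now managed by Ambari.
        if not is_serving(node):
            raise RuntimeError(f"{node} did not come back after takeover")
        done.append(node)
    return done
```

With three nodes the majority is two, so the loop only proceeds when both remaining nodes are healthy, and it stops as soon as a node fails to come back.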

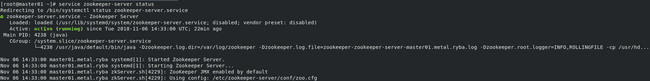

As we can see here the service is already installed and is running correctly on our ‘master01’ node:

Now we can start to ‘takeover’ the Zookeeper service and put it in Ambari. Using the API, we will enable the Zookeeper component on the cluster, install it on the node (which is going to be quick as the packages are already on the machine) and upload its configurations. Before restarting the service with Ambari, we will stop it and delete the systemd script to be sure that it really is Ambari which manages the process.

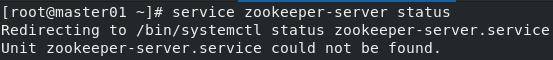

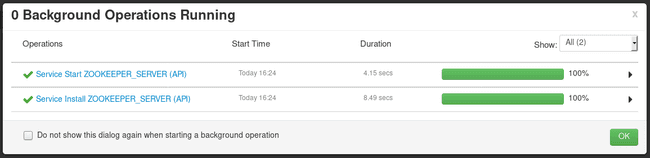

Then we will call the Ambari API to start the service:

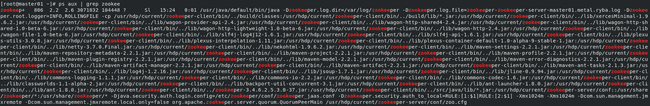

And finally we can check on our node that the Zookeeper process is running properly:

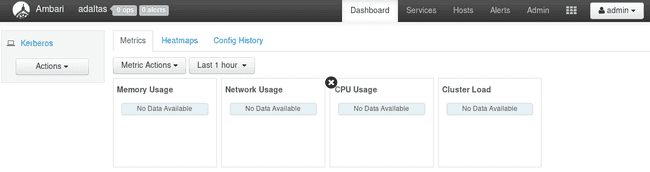

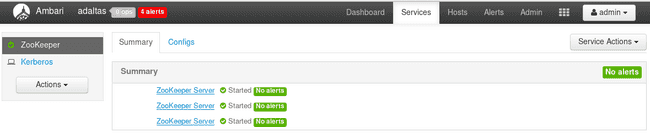

Now that the first Zookeeper node is done, we can proceed with the other two. Here is the result once all three Zookeepers have been ‘takeovered’:

Each Hadoop service with HA capability enabled can be “takeovered” this way with no downtime for the end user. This was really important in the process as multiple production use cases are running on the cluster 24/7.

From the beginning of the project, the philosophy behind Ryba was to automate as many things as possible: generating truststores, keytabs, etc. Since our production cluster was already deployed with Ryba, we are still going to deal with these manually, that is, outside of Ambari. While we give Ambari full power over the topology and the configurations, the SSL keystores and truststores as well as the Kerberos keytabs are still handled in Ryba.

This way the ryba-ambari-takeover project can also be used to bootstrap an Ambari cluster and deploy services on it from bare metal. Every action needed to implement complex configurations (SSL, Kerberos, etc.) is still done as it was in Ryba. The only difference is that the services are managed by Ambari and not by systemd.

Technical difficulties

The most important challenge while migrating our clusters to Ambari was the lack of clear documentation for the Ambari API. Most of the API calls we used were discovered and dissected using Firefox’s network tools. Rewriting the documentation is one of the things Hortonworks is working on with Ambari 2.7/HDP 3.X.

Another notable complexity in this operation was the lack of visibility regarding some configurations in Ambari. When you change a property in the WebUI that conflicts with another one, a popup informs you of it. When using the API, some properties, if not explicitly defined and uploaded to Ambari, silently get code-generated default values.

For example the MapReduce property mapreduce.job.queuename, if not specified, is computed by Ambari here with return leaf_queues.pop(). When installing a new cluster, this returns default as it is the only queue, but in our case we already had queues defined. We spent a lot of time wondering why some users were now placed on the last queue of the cluster in alphabetical order while using Apache Sqoop (i.e. MapReduce).
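The behaviour is easy to reproduce in isolation. The Python sketch below mimics it and is not Ambari's actual code: recommend_queue is a hypothetical function, the queue names are made up, and it assumes the leaf queues are sorted before the last one is popped, which matches what we observed.

```python
def recommend_queue(leaf_queues, current=None):
    """Mimic the default queue selection described above (not Ambari's code)."""
    leaf_queues = sorted(leaf_queues)  # assumption: queues are sorted first
    if current in leaf_queues:
        return current  # an explicitly configured queue is kept
    return leaf_queues.pop()  # otherwise: last queue in alphabetical order


print(recommend_queue(["default"]))                                         # default
print(recommend_queue(["analytics", "default", "etl"]))                     # etl
print(recommend_queue(["analytics", "default", "etl"], current="default"))  # default
```

In other words, explicitly including mapreduce.job.queuename in the uploaded mapred-site configuration is what prevents the generated default from taking over.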

Conclusion

While very complicated, migrating an already installed Hadoop cluster to Ambari is feasible. The main focus is service availability and configuration consistency before and after the takeover. There are thousands of configurations across all the services of a cluster, so make sure not to forget a property!
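One way to guard against a forgotten property is to compare a snapshot of the configurations before the takeover with what Ambari holds afterwards. The Python sketch below is a generic illustration, not part of ryba-ambari-takeover: diff_configs is a hypothetical helper, and it assumes both snapshots are available as dictionaries of the form {config_type: {property: value}}.

```python
def diff_configs(before, after):
    """Compare two {config_type: {property: value}} snapshots."""
    report = {"missing": [], "added": [], "changed": []}
    for config_type in sorted(set(before) | set(after)):
        old = before.get(config_type, {})
        new = after.get(config_type, {})
        for key in sorted(set(old) | set(new)):
            name = f"{config_type}/{key}"
            if key not in new:
                report["missing"].append(name)  # forgotten during the takeover
            elif key not in old:
                report["added"].append(name)    # e.g. a generated default
            elif old[key] != new[key]:
                report["changed"].append((name, old[key], new[key]))
    return report
```

Properties listed under "added" deserve the same attention as the missing ones, since they are where code-generated defaults show up.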

Note

The ryba-ambari-takeover project was entirely written by Lucas Bakalian.