Data Lake ingestion best practices

By David WORMS

Jun 18, 2018


Creating a Data Lake requires rigor and experience. Here are some good practices around data ingestion both for batch and stream architectures that we recommend and implement with our customers.

Objectives

A Data Lake in production represents a lot of jobs, often too few engineers and a huge amount of work. There is therefore a need to:

- Improve productivity

Writing new processing jobs and new features should be enjoyable and results should be obtained quickly.

- Facilitate maintenance

It must be easy to update a job that is already running when a new feature needs to be added.

- Ease operation

The job must be stable and predictable; nobody wants to be woken at night for a job that has problems.

Pattern and principles

In order to improve productivity, it is necessary to facilitate collaboration between teams. This involves implementing practice patterns to promote the development of reusable and shareable code between teams, as well as the design of elementary bricks on which we can build more complex systems. These bricks are either APIs in a Web-Service approach, or collaborative programming libraries.

These patterns must of course be in line with strategic decisions, but also:

- Be dictated by real and concrete use cases

- Not be limited to a single technology

- Not rely on a fixed list of qualified components

Big Data is constantly evolving. Batch processing is very different today, compared to 5 years ago, and is currently slowly maturing. Conversely, stream processing is undergoing transformation and concentrates most of the innovation. For these reasons, Big Data architectures have to evolve over time.

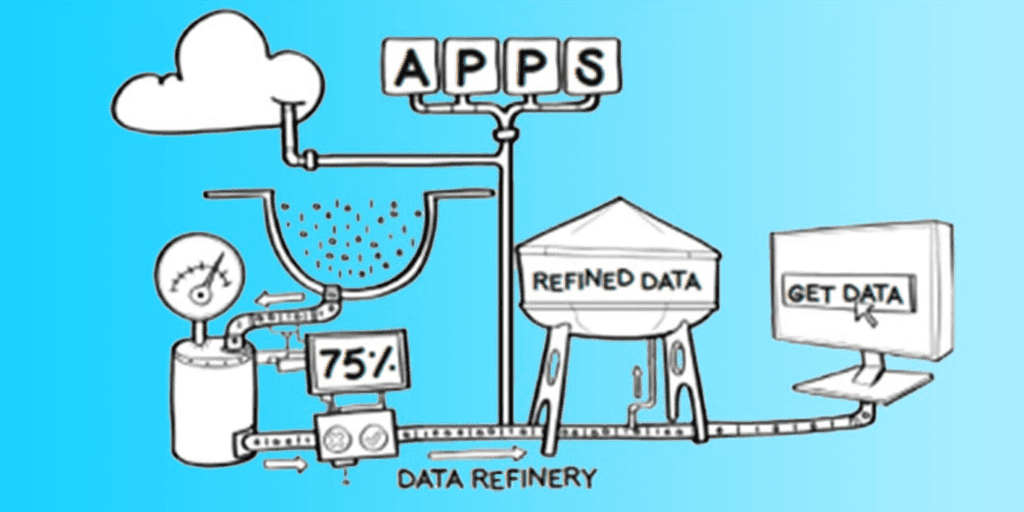

Decoupling data ingestion

Data ingestion must be independent from processing systems. For example:

- An ingestion chain relies on NiFi or is written in Spark.

- It feeds a Hive warehouse for persistent storage.

- Processing queries are written in HQL and in Spark.

Managing incidents

Jobs must be developed with unit tests and the broadest possible integration test coverage. However, the risk of a workflow failing is never zero, and debugging in production is not easy. The root cause can be a change in the data, a condition in the code that was never qualified or observed, or a change in the cluster.

Analyzing the root cause requires two crucial abilities. First, the person handling the incident must be able to quickly run ad hoc or analytical queries on the input, intermediate or final datasets. Second, if the workflow worked the day before, they must be able to replay it on that data in order to determine whether the fault lies in the data or, conversely, in the infrastructure. Note that this implies storing the raw dataset on the cluster.
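The replay idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `diagnose` function, the `parse_amounts` job and the two small datasets are hypothetical names invented for the example, and a real workflow would be replayed through the scheduler against the raw files kept on the cluster.

```python
from typing import Callable, Dict, List

Record = Dict[str, str]


def diagnose(job: Callable[[List[Record]], list],
             known_good_raw: List[Record],
             failing_raw: List[Record]) -> str:
    """Replay the same job on two raw datasets to narrow down the root cause."""

    def succeeds(dataset: List[Record]) -> bool:
        try:
            job(dataset)
            return True
        except Exception:
            return False

    if not succeeds(known_good_raw):
        # Input that worked before fails now: the data is not the culprit.
        return "infrastructure-or-code"
    if not succeeds(failing_raw):
        # Same code, same cluster, only the input differs.
        return "data"
    return "not-reproduced"


def parse_amounts(records: List[Record]) -> List[float]:
    """Hypothetical job: convert the 'amount' column to floats."""
    return [float(record["amount"]) for record in records]


# Hypothetical raw datasets, as they would have been archived at ingestion time.
yesterday_raw = [{"amount": "12.5"}, {"amount": "3"}]
today_raw = [{"amount": "12.5"}, {"amount": "N/A"}]

print(diagnose(parse_amounts, yesterday_raw, today_raw))
```

The sketch only works because both raw inputs are still available, which is the reason for keeping the raw dataset on the cluster.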

Schema governance

Here are examples of the questions raised by schema management:

- What does your data mean?

- Can it be joined with another dataset (“FrankenData”)?

- What are the attributes in common between two datasets?

- Does the evolution of the schema lead to disruptions?

Selecting a common format

It is important to communicate with a common format. It is quite acceptable to have multiple formats for the same set of data, either for simplicity or for performance purposes, but it is necessary to be able to rely on a format that everyone understands. The rare exceptions are due to very large volumes that require keeping a single instance of the dataset in the most optimized format possible.

In the world of data, the two most common exchange formats are Avro and Protocol Buffers. In the Big Data ecosystem, the most common storage formats compatible with an interchange format are Avro, SequenceFile, and JSON.

Avro is the most popular format and offers the following features:

- Rich and flexible data structure.

- Compact, fast and binary format.

- Container file format for persistent data storage.

- Self-described.

- Smooth schema evolution, resolution and conflict detection.

- Reflect Datum Reader to generate schemas and protocols from Java classes.

- Flexible and adapted to batch datasets and streaming messages.

Avro is a file format suitable for information exchange. It can be supplemented by a copy in a format adapted to certain frameworks, such as ORC, which reaches a stronger compression ratio and better performance in Hive.

The format must be usable for both batch and stream architectures.
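To make the notion of a self-described format more concrete, here is a minimal sketch of what an Avro record schema looks like. Avro schemas are written in JSON; the `Order` record, its namespace and its fields are hypothetical and only serve the example. The Python code merely builds and inspects the schema with the standard library; actual serialization would go through an Avro library, which is not shown here.

```python
import json

# Hypothetical "Order" record, written in Avro's JSON schema syntax.
ORDER_SCHEMA = {
    "type": "record",
    "name": "Order",
    "namespace": "com.example.sales",
    "doc": "One customer order as ingested into the Data Lake.",
    "fields": [
        {"name": "id", "type": "string"},
        {"name": "amount", "type": "double"},
        # Optional field: a union with null, defaulting to null.
        {"name": "coupon", "type": ["null", "string"], "default": None},
    ],
}

# The schema is plain JSON, so it can be stored, shared and diffed like code.
schema_json = json.dumps(ORDER_SCHEMA, indent=2)


def field_names(schema: dict) -> list:
    """Return the field names declared by a record schema."""
    return [field["name"] for field in schema["fields"]]


print(schema_json)
print(field_names(json.loads(schema_json)))
```

The same schema can describe the records of a batch file and the messages of a stream, which is what makes it usable for both architectures.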

Sharing the schema

The schema does not stop with the Data Lake and must be accessible and shared by all stakeholders. Structured data must be associated with a schema present in an official registry. There must be a central location storing, referencing and serving the schema used across the Data Lake.

The schema can carry additional information for example to facilitate mapping to JSON or a database.

The use of databases that do not impose a strict schema does not mean that a schema does not apply to the data. For example, much of the data stored in ElasticSearch or HBase is structured in nature.

The publication of the schema and the interconvertibility between Avro and JSON allow several populations to speak to the same API. Thus, the data modeler speaks of the same fields as the data engineer and the frontend developer. Everyone speaks the same language, which improves communication within the company.

In the latest HDP and HDF distributions, Hortonworks Schema Registry is a shared schema repository that allows applications to interact flexibly with each other by saving or retrieving the schemas of the data they need to access. Having a common schema registry provides end-to-end data governance by providing reusable schemas, defining relationships between schemas, and enabling data providers and consumers to evolve at different speeds.
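The role of a central registry can be illustrated with a small in-memory sketch. It is not the Hortonworks Schema Registry API: the `InMemorySchemaRegistry` class, its `register` and `get` methods and the `orders` subject are hypothetical names chosen for the example. The sketch only shows the idea of storing, versioning and serving schemas from one place.

```python
import json
from typing import Dict, List, Optional


class InMemorySchemaRegistry:
    """Toy registry: one ordered list of schema versions per subject."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}

    def register(self, subject: str, schema: dict) -> int:
        """Store a schema and return its version number (starting at 1)."""
        canonical = json.dumps(schema, sort_keys=True)
        versions = self._versions.setdefault(subject, [])
        if canonical in versions:
            # Registering an already known schema returns its existing version.
            return versions.index(canonical) + 1
        versions.append(canonical)
        return len(versions)

    def get(self, subject: str, version: Optional[int] = None) -> dict:
        """Serve a given version of a schema, or the latest one by default."""
        versions = self._versions[subject]
        if version is None:
            version = len(versions)
        if not 1 <= version <= len(versions):
            raise KeyError(f"{subject} has no version {version}")
        return json.loads(versions[version - 1])


registry = InMemorySchemaRegistry()
order_v1 = {"type": "record", "name": "Order",
            "fields": [{"name": "id", "type": "string"}]}
order_v2 = {"type": "record", "name": "Order",
            "fields": [{"name": "id", "type": "string"},
                       {"name": "currency", "type": "string", "default": "EUR"}]}

first_version = registry.register("orders", order_v1)
second_version = registry.register("orders", order_v2)
print(first_version, second_version, registry.get("orders")["fields"])
```

Producers and consumers that look up schemas by subject and version can then evolve at their own pace while still referring to the same definitions.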

Evolution of schemas

Changes applied to the schemas must be propagated continuously. Avro supports backward and forward evolutions. Thanks to backward compatibility, a new schema can be applied to read data created using previous schemas and, with forward compatibility, an older schema can be applied to read data created using newer schemas. This is useful when the schema evolves: applications may not be updated immediately and should still be able to read data written with a new schema, without benefiting from the new features.

Avro’s support for schema evolution means that consumers are not impacted by an evolution and can continue to consume the data. In case of unresolved evolution, the ingestion chain must be interrupted and a manual solution put in place.

The ingestion process must be generic and rely on the definition of the schemas. This implies that schema evolutions are automatically propagated to ingestion.
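The backward and forward cases can be sketched with a deliberately simplified resolution function. It is an illustration, not Avro's implementation: it handles flat records only and ignores type promotion, aliases and nested types. The `resolve` function, the `IncompatibleSchema` exception and the three schema versions are hypothetical names used for the example.

```python
from typing import Any, Dict


class IncompatibleSchema(Exception):
    """Raised when a record cannot be resolved against the reader schema."""


def resolve(record: Dict[str, Any], writer_schema: dict,
            reader_schema: dict) -> Dict[str, Any]:
    """Project a record written with writer_schema onto reader_schema."""
    writer_fields = {field["name"] for field in writer_schema["fields"]}
    resolved = {}
    for field in reader_schema["fields"]:
        name = field["name"]
        if name in writer_fields:
            resolved[name] = record[name]
        elif "default" in field:
            # Field unknown to the writer: fall back to the reader's default.
            resolved[name] = field["default"]
        else:
            raise IncompatibleSchema(
                f"field {name!r} is missing from the data and has no default")
    # Fields known only to the writer are silently dropped.
    return resolved


V1 = {"fields": [{"name": "id"}, {"name": "amount"}]}
V2 = {"fields": [{"name": "id"}, {"name": "amount"},
                 {"name": "currency", "default": "EUR"}]}
V3 = {"fields": [{"name": "id"}, {"name": "amount"}, {"name": "customer"}]}

old_record = {"id": "a1", "amount": 10.0}
new_record = {"id": "a2", "amount": 5.0, "currency": "USD"}

# Backward compatibility: a newer reader schema reads older data.
print(resolve(old_record, V1, V2))
# Forward compatibility: an older reader schema reads newer data.
print(resolve(new_record, V2, V1))
# Unresolved evolution: the ingestion chain should stop here.
try:
    resolve(old_record, V1, V3)
except IncompatibleSchema as error:
    print("ingestion interrupted:", error)
```

A generic ingestion process would run this kind of check from the schema definitions alone, so that compatible evolutions flow through without code changes and incompatible ones stop the chain for manual handling.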

Publications and notifications

Ingestion is a set of coordinated and sequenced processes. A notification system is required to inform other applications of data publishing in the Data Lake (HDFS, Hive, HBase, …) and to trigger other actions.

For example, a consumer application issues a request for data with a certain status and today’s date, and receives a notification as soon as the data is available.

Application scheduling then gains in flexibility and responsiveness. Processing is triggered once the data is qualified and consumable, and does not require scheduling recovery procedures in case the ingestion phase is delayed.

Taken together, all flows, consumers and publishers constitute a map of the exchanges, which enhances traceability.
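The consumer example above can be sketched as a small in-process publish/subscribe mechanism. This is an illustration under stated assumptions: the `Publication` and `NotificationBus` names, the `orders` dataset and the `qualified` status are hypothetical, and a production system would rely on a durable messaging layer rather than a Python list.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Publication:
    """Describes a dataset made available in the Data Lake."""
    dataset: str
    status: str
    day: date


class NotificationBus:
    """Toy bus: consumers register what they wait for, publishers announce it."""

    def __init__(self) -> None:
        self._subscriptions: List[
            Tuple[Publication, Callable[[Publication], None]]] = []

    def subscribe(self, wanted: Publication,
                  callback: Callable[[Publication], None]) -> None:
        self._subscriptions.append((wanted, callback))

    def publish(self, event: Publication) -> int:
        """Notify matching subscribers and return how many were notified."""
        notified = 0
        for wanted, callback in self._subscriptions:
            if wanted == event:
                callback(event)
                notified += 1
        return notified


bus = NotificationBus()
triggered: List[Publication] = []

# The consumer asks for qualified "orders" data for a given day.
wanted = Publication("orders", "qualified", date(2018, 6, 18))
bus.subscribe(wanted, triggered.append)

# Ingestion first lands the raw data, then qualifies it.
bus.publish(Publication("orders", "raw", date(2018, 6, 18)))
bus.publish(Publication("orders", "qualified", date(2018, 6, 18)))
print(triggered)
```

The consumer is triggered only by the second publication, so a late ingestion simply delays the trigger instead of requiring a separate recovery schedule.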

Conclusion

This article is not intended to be an exhaustive list of good practices but covers many useful aspects. Topics covered include incident management, selection of a common format, schema sharing and evolution, and publication of ingested data.