Data Engineering

Data is the energy that feeds digital transformation. The developers consume it in their applications. Data Analysts search, query and share it. Data Scientists feed their algorithms with it. Data Engineers are responsible for setting up the value chain that includes the collection, cleaning, enrichment and provision of data.

Manage scalability, ensure data security and integrity, be fault-tolerant, manipulate batch or streaming data, validate schemas, publish APIs, select formats, models and databases appropriate for their exhibitions are the prerogatives of the Data Engineer. From his work derives the trust and success of those who consume and exploit the data.

Articles related to Data Engineering

CDP partie 6 : cas d'usage bout en bout d'un Data Lakehouse avec CDP

Categories: Big Data, Data Engineering, Formation | Tags: Business Intelligence, Data Engineering, Iceberg, NiFi, Spark, Big Data, Cloudera, CDP, Analyse de données, Data Lake, Entrepôt de données (Data Warehouse)

Dans cet exercice pratique, nous montrons comment construire une solution big data complète avec la Cloudera Data Platform (CDP) Public Cloud, en se basant sur l’infrastructure qui a été déployée tout…

Jul 24, 2023

CDP partie 1 : introduction à l'architecture Data Lakehouse avec CDP

Categories: Cloud computing, Data Engineering, Infrastructure | Tags: Data Engineering, Iceberg, AWS, Azure, Big Data, Cloud, Cloudera, CDP, Cloudera Manager, Entrepôt de données (Data Warehouse)

Cloudera Data Platform (CDP) est une data platform hybride pour l’intégration de donnée, le machine learning et l’analyse de la data. Dans cette série d’articles nous allons décrire comment installer…

By BAUM Stephan

Jun 8, 2023

Déploiement de Keycloak sur EC2

Categories: Cloud computing, Data Engineering, Infrastructure | Tags: EC2, sécurité, Authentification, AWS, Docker, Keycloak, SSL/TLS, SSO

Pourquoi utiliser Keycloak Keycloak est un fournisseur d’identité open source (IdP) utilisant l’authentification unique SSO. Un IdP est un outil permettant de créer, de maintenir et de gérer les…

By BAUM Stephan

Mar 14, 2023

Stage infrastructure big data

Categories: Big Data, Data Engineering, DevOps & SRE, Infrastructure | Tags: Infrastructure, Hadoop, Big Data, Cluster, Internship, Kubernetes, TDP

Présentation de l’offre Le Big Data et l’informatique distribuée sont au cœur d’Adaltas. Nous accompagnons nos partenaires dans le déploiement, la maintenance, l’optimisation et nouvellement le…

By BAUM Stephan

Dec 2, 2022

Comparaison des architectures de base de données : data warehouse, data lake and data lakehouse

Categories: Big Data, Data Engineering | Tags: Gouvernance des données, Infrastructure, Iceberg, Parquet, Spark, Data Lake, Lakehouse, Entrepôt de données (Data Warehouse), Format de fichier

Les architectures de base de données ont fait l’objet d’une innovation constante, évoluant avec l’apparition de nouveaux cas d’utilisation, de contraintes techniques et d’exigences. Parmi les trois…

May 17, 2022

Collecte de logs Databricks vers Azure Monitor à l'échelle d'un workspace

Categories: Cloud computing, Data Engineering, Adaltas Summit 2021 | Tags: Métriques, Supervision, Spark, Azure, Databricks, Log4j

Databricks est une plateforme optimisée d’analyse de donn�ées, basée sur Apache Spark. La surveillance de la plateforme Databricks est cruciale pour garantir la qualité des données, les performances du…

By PLAYE Claire

May 10, 2022

Présentation de Cloudera Data Platform (CDP)

Categories: Big Data, Cloud computing, Data Engineering | Tags: SDX, Big Data, Cloud, Cloudera, CDP, CDH, Analyse de données, Data Hub, Data Lake, Lakehouse, Entrepôt de données (Data Warehouse)

Cloudera Data Platform (CDP) est une plateforme de cloud computing pour les entreprises. CDP fournit des outils intégrés et multifonctionnels en libre-service afin d’analyser et de centraliser les…

Jul 19, 2021

Guide d'apprentissage pour vous former au Big Data & à L'IA avec la plateforme Databricks

Categories: Data Engineering, Formation | Tags: Cloud, Data Lake, Databricks, Delta Lake, MLflow

Databricks Academy propose un programme de cours sur le Big Data, contenant 71 modules, que vous pouvez suivre à votre rythme et selon vos besoins. Il vous en coûtera 2000 $ US pour un accès illimité…

May 26, 2021

Les certifications Microsoft Azure associées aux données

Categories: Cloud computing, Data Engineering | Tags: Gouvernance des données, Azure, Data Science

Microsoft Azure a des parcours de certification pour de nombreux postes techniques tels que développeur, Data Engineers, Data Scientists et architect solution, entre autres. Chacune de ces…

Apr 14, 2021

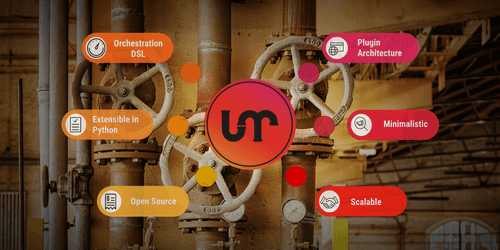

Apache Liminal, quand le MLOps rencontre le GitOps

Categories: Big Data, Orchestration de conteneurs, Data Engineering, Data Science, Tech Radar | Tags: Data Engineering, CI/CD, Data Science, Deep Learning, Déploiement, Docker, GitOps, Kubernetes, Machine Learning, MLOps, Open source, Python, TensorFlow

Apache Liminal propose une solution clés en main permettant de déployer un pipeline de Machine Learning. C’est un projet open-source, qui centralise l’ensemble des étapes nécessaires à l’entrainement…

Mar 31, 2021

Espace de stockage et temps de génération des formats de fichiers

Categories: Data Engineering, Data Science | Tags: Avro, HDFS, Hive, ORC, Parquet, Big Data, Data Lake, Format de fichier, JavaScript Object Notation (JSON)

Le choix d’un format de fichier approprié est essentiel, que les données soient en transit ou soient stockées. Chaque format de fichier a ses avantages et ses inconvénients. Nous les avons couverts…

Mar 22, 2021

TensorFlow Extended (TFX) : les composants et leurs fonctionnalités

Categories: Big Data, Data Engineering, Data Science, Formation | Tags: Beam, Data Engineering, Pipeline, CI/CD, Data Science, Deep Learning, Déploiement, Machine Learning, MLOps, Open source, Python, TensorFlow

La mise en production des modèles de Machine Learning (ML) et de Deep Learning (DL) est une tâche difficile. Il est reconnu qu’elle est plus sujette à l’échec et plus longue que la modélisation…

Mar 5, 2021

Connexion à ADLS Gen2 depuis Hadoop (HDP) et NiFi (HDF)

Categories: Big Data, Cloud computing, Data Engineering | Tags: Hadoop, HDFS, NiFi, Authentification, Autorisation, Azure, Azure Data Lake Storage (ADLS), OAuth2

Alors que les projets Data construits sur le cloud deviennent de plus en plus répandus, un cas d’utilisation courant consiste à interagir avec le stockage cloud à partir d’une plateforme Big Data on…

Nov 5, 2020

Suivi d'expériences avec MLflow sur Databricks Community Edition

Categories: Data Engineering, Data Science, Formation | Tags: Spark, Databricks, Deep Learning, Delta Lake, Machine Learning, MLflow, Notebook, Python, Scikit-learn

Introduction au Databricks Community Edition et MLflow Chaque jour, le nombre d’outils permettant aux Data Scientists de créer des modèles plus rapidement augmente. Par conséquent, la nécessité de…

Sep 10, 2020

Téléchargement de jeux de données dans HDFS et Hive

Categories: Big Data, Data Engineering | Tags: Business Intelligence, Data Engineering, Data structures, Base de données, Hadoop, HDFS, Hive, Big Data, Analyse de données, Data Lake, Lakehouse, Entrepôt de données (Data Warehouse)

Introduction De nos jours, l’analyse de larges quantités de données devient de plus en plus possible grâce aux technologies du Big data (Hadoop, Spark,…). Cela explique l’explosion du volume de…

By NGOM Aida

Jul 31, 2020

Comparaison de différents formats de fichier en Big Data

Categories: Big Data, Data Engineering | Tags: Business Intelligence, Data structures, Avro, HDFS, ORC, Parquet, Traitement par lots, Big Data, CSV, JavaScript Object Notation (JSON), Kubernetes, Protocol Buffers

Dans l’univers du traitement des données, il existe différents types de formats de fichiers pour stocker vos jeu de données. Chaque format a ses propres avantages et inconvénients selon les cas d…

By NGOM Aida

Jul 23, 2020

Importer ses données dans Databricks : tables externes et Delta Lake

Categories: Data Engineering, Data Science, Formation | Tags: Parquet, AWS, Amazon S3, Azure Data Lake Storage (ADLS), Databricks, Delta Lake, Python

Au cours d’un projet d’apprentissage automatique (Machine Learning, ML), nous devons garder une trace des données test que nous utilisons. Cela est important à des fins d’audit et pour évaluer la…

May 21, 2020

Optimisation d'applicationS Spark dans Hadoop YARN

Categories: Data Engineering, Formation | Tags: Performance, Hadoop, Spark, Python

Apache Spark est un outil de traitement de données in-memory très répandu en entreprise pour traiter des problématiques Big Data. L’exécution d’une application Spark en production nécessite des…

Mar 30, 2020

MLflow tutorial : une plateforme de Machine Learning (ML) Open Source

Categories: Data Engineering, Data Science, Formation | Tags: AWS, Azure, Databricks, Deep Learning, Déploiement, Machine Learning, MLflow, MLOps, Python, Scikit-learn

Introduction et principes de MLflow Avec une puissance de calcul et un stockage de moins en moins chers et en même temps une collecte de données de plus en plus importante dans tous les domaines, de…

Mar 23, 2020

Configuration à distance et auto-indexage des pipelines Logstash

Categories: Data Engineering, Infrastructure | Tags: Docker, Elasticsearch, Kibana, Logstash, Log4j

Logstash est un puissant moteur de collecte de données qui s’intègre dans la suite Elastic (Elasticsearch - Logstash - Kibana). L’objectif de cet article est de montrer comment déployer un cluster…

Dec 13, 2019

Stage Data Science & Data Engineer - ML en production et ingestion streaming

Categories: Data Engineering, Data Science | Tags: DevOps, Flink, Hadoop, HBase, Kafka, Spark, Internship, Kubernetes, Python

Contexte L’évolution exponentielle des données a bouleversé l’industrie en redéfinissant les méthodes de stockages, de traitement et d’acheminement des données. Maitriser ces méthodes facilite…

By WORMS David

Nov 26, 2019

Insérer des lignes dans une table BigQuery avec des colonnes complexes

Categories: Cloud computing, Data Engineering | Tags: GCP, BigQuery, Schéma, SQL

Le service BigQuery de Google Cloud est une solution data warehouse conçue pour traiter d’énormes volumes de données avec un certain nombre de fonctionnalités disponibles. Parmi toutes celles-ci, nous…

Nov 22, 2019

Mise en production d'un modèle de Machine Learning

Categories: Big Data, Data Engineering, Data Science, DevOps & SRE | Tags: DevOps, Exploitation, IA, Cloud, Machine Learning, MLOps, On-premises, Schéma

“Le Machine Learning en entreprise nécessite une vision globale […] du point de vue de l’ingénierie et de la plateforme de données”, a expliqué Justin Norman lors de son intervention sur le…

Sep 30, 2019

Spark Streaming partie 4 : clustering avec Spark MLlib

Categories: Data Engineering, Data Science, Formation | Tags: Apache Spark Streaming, Spark, Big Data, Clustering, Machine Learning, Scala, Streaming

Spark MLlib est une bibliothèque Spark d’Apache offrant des implémentations performantes de divers algorithmes d’apprentissage automatique supervisés et non supervisés. Ainsi, le framework Spark peut…

Jun 27, 2019

Spark Streaming partie 3 : DevOps, outils et tests pour les applications Spark

Categories: Big Data, Data Engineering, DevOps & SRE | Tags: Apache Spark Streaming, DevOps, Enseignement et tutorial, Spark

L’indisponibilité des services entraîne des pertes financières pour les entreprises. Les applications Spark Streaming ne sont pas exempts de pannes, comme tout autre logiciel. Une application…

May 31, 2019

Spark Streaming Partie 2 : traitement d'une pipeline Spark Structured Streaming dans Hadoop

Categories: Data Engineering, Formation | Tags: Apache Spark Streaming, Spark, Python, Streaming

Spark est conçu pour traiter des données streaming de manière fluide sur un cluster Hadoop multi-nœuds, utilisant HDFS pour le stockage et YARN pour l’orchestration de tâches. Ainsi, Spark Structured…

May 28, 2019

Spark Streaming partie 1 : construction de data pipelines avec Spark Structured Streaming

Categories: Data Engineering, Formation | Tags: Apache Spark Streaming, Kafka, Spark, Big Data, Streaming

Spark Structured Streaming est un nouveau moteur de traitement stream introduit avec Apache Spark 2. Il est construit sur le moteur Spark SQL et utilise le modèle Spark DataFrame. Le moteur Structured…

Apr 18, 2019

Publier Spark SQL Dataframe et RDD avec Spark Thrift Server

Categories: Data Engineering | Tags: Thrift, JDBC, Hadoop, Hive, Spark, SQL

La nature distribuée et en-mémoire du moteur de traitement Spark en fait un excellant candidat pour exposer des données à des clients qui souhaitent des latences faibles. Les dashboards, les notebooks…

Mar 25, 2019

Apache Flink : passé, présent et futur

Categories: Data Engineering | Tags: Pipeline, Flink, Kubernetes, Machine Learning, SQL, Streaming

Apache Flink est une petite pépite méritant beaucoup plus d’attention. Plongeons nous dans son passé, son état actuel et le futur vers lequel il se dirige avec les keytones et présentations de la…

Nov 5, 2018

Ingestion de Data Lake, quelques bonnes pratiques

Categories: Big Data, Data Engineering | Tags: Gouvernance des données, HDF, Exploitation, Avro, Hive, NiFi, ORC, Spark, Data Lake, Format de fichier, Protocol Buffers, Registre, Schéma

La création d’un Data Lake demande de la rigueur et de l’expérience. Voici plusieurs bonnes pratiques autour de l’ingestion des données en batch et en flux continu que nous recommandons et mettons en…

By WORMS David

Jun 18, 2018

Apache Beam : un modèle de programmation unifié pour les pipelines de traitement de données

Categories: Data Engineering, DataWorks Summit 2018 | Tags: Apex, Beam, Pipeline, Flink, Spark

Dans cet article, nous allons passer en revue les concepts, l’histoire et le futur d’Apache Beam, qui pourrait bien devenir le nouveau standard pour la définition des pipelines de traitement de…

May 24, 2018

Quelles nouveautés pour Apache Spark 2.3 ?

Categories: Data Engineering, DataWorks Summit 2018 | Tags: Arrow, PySpark, Performance, ORC, Spark, Spark MLlib, Data Science, Docker, Kubernetes, pandas, Streaming

Plongeons nous dans les nouveautés proposées par la nouvelle distribution 2.3 d’Apache Spark. Cette article est composé de recherches et d’informations issues des présentations suivantes du DataWorks…

May 23, 2018

Executer du Python dans un workflow Oozie

Categories: Data Engineering | Tags: Oozie, Elasticsearch, Python, REST

Les workflows Oozie permettent d’utiliser plusieurs actions pour exécuter du code, cependant il peut être délicat d’exécuter du Python, nous allons voir comment faire. J’ai récemment implémenté un…

Mar 6, 2018

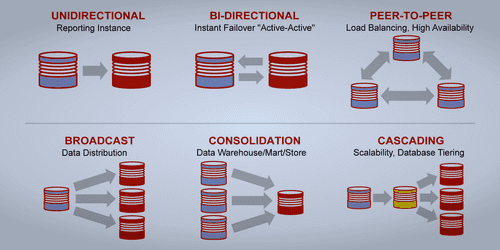

Synchronisation Oracle vers Hadoop avec un CDC

Categories: Data Engineering | Tags: CDC, GoldenGate, Oracle, Hive, Sqoop, Entrepôt de données (Data Warehouse)

Cette note résulte d’une discussion autour de la synchronisation de données écrites dans une base de données à destination d’un entrepôt stocké dans Hadoop. Merci à Claude Daub de GFI qui la rédigea…

By WORMS David

Jul 13, 2017

EclairJS - Un peu de Spark dans les Web Apps

Categories: Data Engineering, Front End | Tags: Spark, JavaScript, Jupyter

Présentation de David Fallside, IBM. Les images sont issues des slides de présentation. Introduction Le développement d’applications Web est passé d’un environnement Java vers des environnements…

By WORMS David

Jul 17, 2016

Diviser des fichiers HDFS en plusieurs tables Hive

Categories: Data Engineering | Tags: Flume, Pig, HDFS, Hive, Oozie, SQL

Je vais montrer comment scinder fichier CSV stocké dans HDFS en plusieurs tables Hive en fonction du contenu de chaque enregistrement. Le contexte est simple. Nous utilisons Flume pour collecter les…

By WORMS David

Sep 15, 2013

Stockage HDFS et Hive - comparaison des formats de fichiers et compressions

Categories: Data Engineering | Tags: Business Intelligence, Hive, ORC, Parquet, Format de fichier

Il y a quelques jours, nous avons conduit un test dans le but de comparer différents format de fichiers et méthodes de compression disponibles dans Hive. Parmi ces formats, certains sont natifs à HDFS…

By WORMS David

Mar 13, 2012

Deux Hive UDAF pour convertir une aggregation vers une map

Categories: Data Engineering | Tags: Java, HBase, Hive, Format de fichier

Je publie deux nouvelles fonctions UDAF pour Hive pour aider avec les map dans Apache Hive. Le code source est disponible sur GitHub dans deux classes Java : “UDAFToMap” et “UDAFToOrderedMap” ou vous…

By WORMS David

Mar 6, 2012