Big Data

Data, and the insight it offers, are essential for businesses to innovate and differentiate. Coming from a variety of sources, from inside the firewall out to the edge, the growth of data in terms of volume, variety, and speed leads to innovative approaches. Today, data lakes and data hub architectures allow organizations to accumulate huge reservoirs of information for future analysis. At the same time, the Cloud provides easy access to technologies to those who do not have the necessary infrastructure and Artificial Intelligence promises to proactively simplify management.

With Big Data technologies, Business Intelligence is entering a new era. Hadoop and the likes, NoSQL databases, and cloud-managed infrastructures store and represent structured and unstructured data and time series such as logs and sensors. From collect to visualization, the whole processing chain operates in batch and real-time.

Infrastructure

Cloud, on-premise, and hybrid environments:

- Integration with the information system

- Automated deployments

- End-to-end security

- Management in multi-tenant environments

- Clusters operations, disaster recovery

- Level 3 support

Data management

Governance and data provisioning:

- Big Data and Data Lake architecture

- Modeling and application architecture

- Low latency and high throughput use cases

- Batch and streaming data sourcing and ingestion pipelines

- Data cleaning and enrichment

- Data quality control and enforcement

Data intelligence

Collaboration with business units to serve projects needs:

- Restitution et visualisation de données

- Distributed traitement Optimisation des flux et des traitements distribués

- Ad hoc queries and data mining

- Machine Learning models and custom algorithms elaboration

- DevOps, SRE et MLOps

Articles related to Big Data

Introduction à OpenLineage

Categories: Big Data, Gouvernance des données, Infrastructure | Tags: Data Engineering, Infrastructure, Atlas, Data Lake, Lakehouse, Entrepôt de données (Data Warehouse), Traçabilité (data lineage)

OpenLineage est une spécification open source de lineage des données. La spécification est complétée par Marquez, son implémentation de référence. Depuis son lancement fin 2020, OpenLineage est…

Feb 19, 2024

Guide d'installation à TDP, la plateforme big data 100% open source

Categories: Big Data, Infrastructure | Tags: Infrastructure, VirtualBox, Hadoop, Vagrant, TDP

La Trunk Data Platforme (TDP) est une distribution big data 100% open source, basée sur Apache Hadoop et compatible avec HDP 3.1. Initiée en 2021 par EDF, la DGFiP et Adaltas, le projet est gouverné…

By FARAULT Paul

Oct 18, 2023

Lancement du site Internet de TDP

Categories: Big Data | Tags: Programmation, Ansible, Hadoop, Python, TDP

Le nouveau site Internet de TDP (Trunk Data Platform) est en ligne. Nous vous invitons à le parcourir pour découvrir la platorme, rester informés, et cultiver le contact avec la communauté TDP. TDP…

By WORMS David

Oct 3, 2023

CDP partie 6 : cas d'usage bout en bout d'un Data Lakehouse avec CDP

Categories: Big Data, Data Engineering, Formation | Tags: Business Intelligence, Data Engineering, Iceberg, NiFi, Spark, Big Data, Cloudera, CDP, Analyse de données, Data Lake, Entrepôt de données (Data Warehouse)

Dans cet exercice pratique, nous montrons comment construire une solution big data complète avec la Cloudera Data Platform (CDP) Public Cloud, en se basant sur l’infrastructure qui a été déployée tout…

Jul 24, 2023

CDP partie 5 : gestion des permissions utilisateurs sur CDP

Categories: Big Data, Cloud computing, Gouvernance des données | Tags: Ranger, Cloudera, CDP, Entrepôt de données (Data Warehouse)

Lorsqu’un utilisateur ou un groupe est créé dans CDP, des permissions doivent leur être attribuées pour accéder aux ressources et utiliser les Data Services. Cet article est le cinquième d’une série…

Jul 18, 2023

CDP partie 4 : gestion des utilisateurs sur CDP avec Keycloak

Categories: Big Data, Cloud computing, Gouvernance des données | Tags: EC2, Big Data, CDP, Docker Compose, Keycloak, SSO

Les articles précédents de la série couvrent le déploiement d’un environnement CDP Public Cloud. Tous les composants sont prêts à être utilisés et il est temps de mettre l’environnement à la…

Jul 4, 2023

CDP partie 3 : activation des Data Services en environnment CDP Public Cloud

Categories: Big Data, Cloud computing, Infrastructure | Tags: Infrastructure, AWS, Big Data, Cloudera, CDP

L’un des principaux arguments de vente de Cloudera Data Platform (CDP) est la maturité de son offre de services. Ceux-ci sont faciles à déployer sur site, dans le cloud public ou dans le cadre d’une…

Jun 27, 2023

CDP partie 2 : déploiement d'un environnement CDP Public Cloud sur AWS

Categories: Big Data, Cloud computing, Infrastructure | Tags: Infrastructure, AWS, Big Data, Cloud, Cloudera, CDP, Cloudera Manager

La Cloudera Data Platform (CDP) Public Cloud constitue la base sur laquelle des lacs de données (Data Lake) complets sont créés. Dans un article précédent, nous avons présenté la plateforme CDP. Cet…

Jun 19, 2023

Exigences et attentes d'une plateforme Big Data

Categories: Big Data, Infrastructure | Tags: Data Engineering, Gouvernance des données, Analyse de données, Data Hub, Data Lake, Lakehouse, Data Science

Une plateforme Big Data est un système complexe et sophistiqué qui permet aux organisations de stocker, traiter et analyser de gros volumes de données provenant de diverses sources. Elle se compose de…

By WORMS David

Mar 23, 2023

Gestion de Kafka dans Kubernetes avec Strimzi

Categories: Big Data, Orchestration de conteneurs, Infrastructure | Tags: Kafka, Big Data, Kubernetes, Open source, Streaming

Kubernetes n’est pas la première plateforme à laquelle on pense pour faire tourner des clusters Apache Kafka. En effet, la forte adhérence de Kafka au stockage pourrait être difficile à gérer par…

Mar 7, 2023

Plongée dans tdp-lib, le SDK en charge de la gestion de clusters TDP

Categories: Big Data, Infrastructure | Tags: Programmation, Ansible, Hadoop, Python, TDP

Tous les déploiements TDP sont automatisés. Ansible y joue un rôle central. Avec la complexité grandissante de notre base logicielle, un nouveau système était nécessaire afin de s’affranchir des…

Jan 24, 2023

Adaltas Summit 2022 Morzine

Categories: Big Data, Adaltas Summit 2022 | Tags: Data Engineering, Infrastructure, Iceberg, Conteneur, Lakehouse, Docker, Kubernetes

Pour sa troisième édition, toute l’équipe d’Adaltas se retrouve à Morzine pour une semaine entière avec 2 jours dédiés à la technologie les 15 et 16 septembre 2022. Les intervenants choisissent l’un…

By WORMS David

Jan 13, 2023

Stage infrastructure big data

Categories: Big Data, Data Engineering, DevOps & SRE, Infrastructure | Tags: Infrastructure, Hadoop, Big Data, Cluster, Internship, Kubernetes, TDP

Présentation de l’offre Le Big Data et l’informatique distribuée sont au cœur d’Adaltas. Nous accompagnons nos partenaires dans le déploiement, la maintenance, l’optimisation et nouvellement le…

By BAUM Stephan

Dec 2, 2022

Stockage objet Ceph dans un cluster Kubernetes avec Rook

Categories: Big Data, Gouvernance des données, Formation | Tags: Amazon S3, Big Data, Ceph, Cluster, Data Lake, Kubernetes, Storage

Ceph est un système tout-en-un de stockage distribué. Fiable et mature, sa première version stable est parue en 2012 et a été depuis la référence pour le stockage open source. L’avantage principal de…

By BIGOT Luka

Aug 4, 2022

Stockage objet avec MinIO dans un cluster Kubernetes

Categories: Big Data, Gouvernance des données, Formation | Tags: Amazon S3, Big Data, Cluster, Data Lake, Kubernetes, Storage

MinIO est une solution de stockage objet populaire. Souvent recommandé pour sa simplicité d’utilisation et d’installation, MinIO n’est pas seulement qu’un bon moyen pour débuter avec le stockage objet…

By BIGOT Luka

Jul 9, 2022

Architecture du stockage objet et attributs du standard S3

Categories: Big Data, Gouvernance des données | Tags: Base de données, API, Amazon S3, Big Data, Data Lake, Storage

Le stockage objet a gagné en popularité parmi les architectures de stockage de données. Comparé aux systèmes de fichiers et au stockage bloc, le stockage objet ne rencontre pas de limitations lorsqu…

By BIGOT Luka

Jun 20, 2022

Comparaison des architectures de base de données : data warehouse, data lake and data lakehouse

Categories: Big Data, Data Engineering | Tags: Gouvernance des données, Infrastructure, Iceberg, Parquet, Spark, Data Lake, Lakehouse, Entrepôt de données (Data Warehouse), Format de fichier

Les architectures de base de données ont fait l’objet d’une innovation constante, évoluant avec l’apparition de nouveaux cas d’utilisation, de contraintes techniques et d’exigences. Parmi les trois…

May 17, 2022

Découvrez Trunk Data Platform : La Distribution Big Data Open-Source par TOSIT

Categories: Big Data, DevOps & SRE, Infrastructure | Tags: Ranger, DevOps, Hortonworks, Ansible, Hadoop, HBase, Knox, Spark, Cloudera, CDP, CDH, Open source, TDP

Depuis la fusion de Cloudera et Hortonworks, la sélection de distributions Hadoop commerciales on-prem se réduit à CDP Private Cloud. CDP est un mélange de CDH et de HDP conservant les meilleurs…

Apr 14, 2022

Apache HBase : colocation de RegionServers

Categories: Big Data, Adaltas Summit 2021, Infrastructure | Tags: Ambari, Base de données, Infrastructure, Performance, Hadoop, HBase, Big Data, HDP, Storage

Les RegionServers sont les processus gérant le stockage et la récupération des données dans Apache HBase, la base de données non-relationnelle orientée colonne de Apache Hadoop. C’est à travers leurs…

Feb 22, 2022

Utilisation de Cloudera Deploy pour installer Cloudera Data Platform (CDP) Private Cloud

Categories: Big Data, Cloud computing | Tags: Ansible, Cloudera, CDP, Cluster, Entrepôt de données (Data Warehouse), Vagrant, IaC

Suite à notre récente présentation de CDP, passons désormais au déploiement CDP private Cloud sur votre infrastructure locale. Le deploiement est entièrement automatisé avec les cookbooks Ansible…

Jul 23, 2021

Présentation de Cloudera Data Platform (CDP)

Categories: Big Data, Cloud computing, Data Engineering | Tags: SDX, Big Data, Cloud, Cloudera, CDP, CDH, Analyse de données, Data Hub, Data Lake, Lakehouse, Entrepôt de données (Data Warehouse)

Cloudera Data Platform (CDP) est une plateforme de cloud computing pour les entreprises. CDP fournit des outils intégrés et multifonctionnels en libre-service afin d’analyser et de centraliser les…

Jul 19, 2021

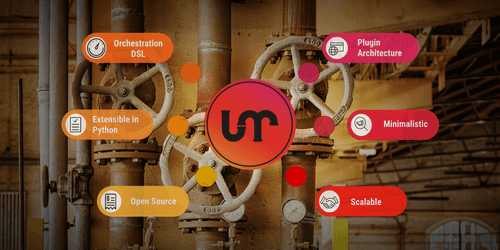

Apache Liminal, quand le MLOps rencontre le GitOps

Categories: Big Data, Orchestration de conteneurs, Data Engineering, Data Science, Tech Radar | Tags: Data Engineering, CI/CD, Data Science, Deep Learning, Déploiement, Docker, GitOps, Kubernetes, Machine Learning, MLOps, Open source, Python, TensorFlow

Apache Liminal propose une solution clés en main permettant de déployer un pipeline de Machine Learning. C’est un projet open-source, qui centralise l’ensemble des étapes nécessaires à l’entrainement…

Mar 31, 2021

TensorFlow Extended (TFX) : les composants et leurs fonctionnalités

Categories: Big Data, Data Engineering, Data Science, Formation | Tags: Beam, Data Engineering, Pipeline, CI/CD, Data Science, Deep Learning, Déploiement, Machine Learning, MLOps, Open source, Python, TensorFlow

La mise en production des modèles de Machine Learning (ML) et de Deep Learning (DL) est une tâche difficile. Il est reconnu qu’elle est plus sujette à l’échec et plus longue que la modélisation…

Mar 5, 2021

Construire votre distribution Big Data open source avec Hadoop, Hive, HBase, Spark et Zeppelin

Categories: Big Data, Infrastructure | Tags: Maven, Hadoop, HBase, Hive, Spark, Git, Versions et évolutions, TDP, Tests unitaires

L’écosystème Hadoop a donné naissance à de nombreux projets populaires tels que HBase, Spark et Hive. Bien que des technologies plus récentes commme Kubernetes et les stockages objets compatibles S…

Dec 18, 2020

Connexion à ADLS Gen2 depuis Hadoop (HDP) et NiFi (HDF)

Categories: Big Data, Cloud computing, Data Engineering | Tags: Hadoop, HDFS, NiFi, Authentification, Autorisation, Azure, Azure Data Lake Storage (ADLS), OAuth2

Alors que les projets Data construits sur le cloud deviennent de plus en plus répandus, un cas d’utilisation courant consiste à interagir avec le stockage cloud à partir d’une plateforme Big Data on…

Nov 5, 2020

Reconstruction de Hive dans HDP : patch, test et build

Categories: Big Data, Infrastructure | Tags: Maven, GitHub, Java, Hive, Git, Versions et évolutions, TDP, Tests unitaires

La distribution HDP d’Hortonworks va bientôt être dépreciée a profit de la distribution CDP proposée par Cloudera. Un client nous a demandé d’intégrer d’une nouvelle feature de Apache Hive sur son…

Oct 6, 2020

Installation d'Hadoop depuis le code source : build, patch et exécution

Categories: Big Data, Infrastructure | Tags: Maven, Java, LXD, Hadoop, HDFS, Docker, TDP, Tests unitaires

Les distributions commerciales d’Apache Hadoop ont beaucoup évolué ces dernières années. Les deux concurrents Cloudera et Hortonworks ont fusionné : HDP ne sera plus maintenu et CDH devient CDP. HP a…

Aug 4, 2020

Téléchargement de jeux de données dans HDFS et Hive

Categories: Big Data, Data Engineering | Tags: Business Intelligence, Data Engineering, Data structures, Base de données, Hadoop, HDFS, Hive, Big Data, Analyse de données, Data Lake, Lakehouse, Entrepôt de données (Data Warehouse)

Introduction De nos jours, l’analyse de larges quantités de données devient de plus en plus possible grâce aux technologies du Big data (Hadoop, Spark,…). Cela explique l’explosion du volume de…

By NGOM Aida

Jul 31, 2020

Comparaison de différents formats de fichier en Big Data

Categories: Big Data, Data Engineering | Tags: Business Intelligence, Data structures, Avro, HDFS, ORC, Parquet, Traitement par lots, Big Data, CSV, JavaScript Object Notation (JSON), Kubernetes, Protocol Buffers

Dans l’univers du traitement des données, il existe différents types de formats de fichiers pour stocker vos jeu de données. Chaque format a ses propres avantages et inconvénients selon les cas d…

By NGOM Aida

Jul 23, 2020

Automatisation d'un workflow Spark sur GCP avec GitLab

Categories: Big Data, Cloud computing, Orchestration de conteneurs | Tags: Enseignement et tutorial, Airflow, Spark, CI/CD, GitLab, GitOps, GCP, Terraform

Un workflow consiste à automiatiser une succéssion de tâche qui dont être menée indépendemment d’une intervention humaine. C’est un concept important et populaire, s’appliquant particulièrement à un…

Jun 16, 2020

Premier pas avec Apache Airflow sur AWS

Categories: Big Data, Cloud computing, Orchestration de conteneurs | Tags: PySpark, Enseignement et tutorial, Airflow, Oozie, Spark, AWS, Docker, Python

Apache Airflow offre une solution répondant au défi croissant d’un paysage de plus en plus complexe d’outils de gestion de données, de scripts et de traitements d’analyse à gérer et coordonner. C’est…

May 5, 2020

Cloudera CDP et migration Cloud de votre Data Warehouse

Categories: Big Data, Cloud computing | Tags: Azure, Cloudera, Data Hub, Data Lake, Entrepôt de données (Data Warehouse)

Alors que l’un de nos clients anticipe un passage vers le Cloud et avec l’annonce récente de la disponibilité de Cloudera CDP mi-septembre lors de la conférence Strata, il semble que le moment soit…

By WORMS David

Dec 16, 2019

Migration Big Data et Data Lake vers le Cloud

Categories: Big Data, Cloud computing | Tags: DevOps, AWS, Azure, Cloud, CDP, Databricks, GCP

Est-il impératif de suivre tendance et de migrer ses données, workflow et infrastructure vers l’un des Cloud providers tels que GCP, AWS ou Azure ? Lors de la Strata Data Conference à New-York, un…

Dec 9, 2019

Stage InfraOps & DevOps - construction d'une offre PaaS Big Data & Kubernetes

Categories: Big Data, Orchestration de conteneurs | Tags: DevOps, LXD, Hadoop, Kafka, Spark, Ceph, Internship, Kubernetes, NoSQL

Contexte L’acquisition d’un cluster à forte capacité répond à la volonté d’Adaltas de construire une offre de type PAAS pour disposer et mettre à disposition des plateformes de Big Data et d…

By WORMS David

Nov 26, 2019

Notes sur le nouveau modèle de licences Open Source de Cloudera

Categories: Big Data | Tags: CDSW, Licence, Cloudera Manager, Open source

Suite à la publication de sa stratégie de licences Open Source le 10 juillet 2019 dans un article intitulé “notre engagement envers les logiciels Open Source”, Cloudera a diffusé un webinaire hier le…

By WORMS David

Oct 25, 2019

Mise en production d'un modèle de Machine Learning

Categories: Big Data, Data Engineering, Data Science, DevOps & SRE | Tags: DevOps, Exploitation, IA, Cloud, Machine Learning, MLOps, On-premises, Schéma

“Le Machine Learning en entreprise nécessite une vision globale […] du point de vue de l’ingénierie et de la plateforme de données”, a expliqué Justin Norman lors de son intervention sur le…

Sep 30, 2019

Apache Hive 3, nouvelles fonctionnalités et conseils et astuces

Categories: Big Data, Business Intelligence, DataWorks Summit 2019 | Tags: Druid, JDBC, LLAP, Hadoop, Hive, Kafka, Versions et évolutions

Disponible depuis juillet 2018 avec HDP3 (Hortonworks Data Platform 3), Apache Hive 3 apporte de nombreuses fonctionnalités intéressantes à l’entrepôt de données. Malheureusement, comme beaucoup de…

Jul 25, 2019

Auto-scaling de Druid avec Kubernetes

Categories: Big Data, Business Intelligence, Orchestration de conteneurs | Tags: EC2, Druid, Helm, Métriques, OLAP, Exploitation, Orchestration de conteneurs, Cloud, CNCF, Analyse de données, Kubernetes, Prometheus, Python

Apache Druid est un système de stockage de données open-source destiné à l’analytics qui peut profiter des capacités d’auto-scaling de Kubernetes de par son architecture distribuée. Cet article est…

Jul 16, 2019

Intégration de Druid et Hive

Categories: Big Data, Business Intelligence, Tech Radar | Tags: Druid, LLAP, OLAP, Hive, Analyse de données, SQL

Nous allons dans cet article traiter de l’intégration entre Hive Interactive (LLAP) et Druid. Cet article est un complément à l’article Ultra-fast OLAP Analytics with Apache Hive and Druid.…

Jun 17, 2019

Spark Streaming partie 3 : DevOps, outils et tests pour les applications Spark

Categories: Big Data, Data Engineering, DevOps & SRE | Tags: Apache Spark Streaming, DevOps, Enseignement et tutorial, Spark

L’indisponibilité des services entraîne des pertes financières pour les entreprises. Les applications Spark Streaming ne sont pas exempts de pannes, comme tout autre logiciel. Une application…

May 31, 2019

Apache Knox, c'est facile !

Categories: Big Data, Cybersécurité, Adaltas Summit 2018 | Tags: Ranger, LDAP, Active Directory, Knox, Kerberos, REST

Apache Knox est le point d’entrée sécurisé d’un cluster Hadoop, mais peut-il être également le point d’entrée de mes applications REST ? Vue d’ensemble d’Apache Knox Apache Knox est une passerelle…

Feb 4, 2019

Prise de contrôle d'un cluster Hadoop avec Apache Ambari

Categories: Big Data, DevOps & SRE, Adaltas Summit 2018 | Tags: Ambari, Automation, iptables, Nikita, Systemd, Cluster, HDP, Kerberos, Noeud, Node.js, REST

Nous avons récemment migré un large cluster Hadoop de production installé “manuellement” vers Apache Ambari. Nous avons nommé cette opération “Ambari Takeover”. C’est un processus à risque et nous…

Nov 15, 2018

Déploiement d'un cluster Flink sécurisé sur Kubernetes

Categories: Big Data | Tags: Chiffrement, Flink, HDFS, Kafka, Elasticsearch, Kerberos, SSL/TLS

Le déploiement sécurisée d’une application Flink dans Kubernetes, entraine deux options. En supposant que votre Kubernetes est sécurisé, vous pouvez compter sur la plateforme sous-jacente ou utiliser…

By WORMS David

Oct 8, 2018

Migration de cluster et de traitements entre Hadoop 2 et 3

Categories: Big Data, Infrastructure | Tags: Shiro, Erasure Coding, Rolling Upgrade, HDFS, Spark, YARN, Docker

La migration de Hadoop 2 vers Hadoop 3 est un sujet brûlant. Comment mettre à niveau vos clusters, quelles fonctionnalités présentes dans la nouvelle version peuvent résoudre les problèmes actuels et…

Jul 25, 2018

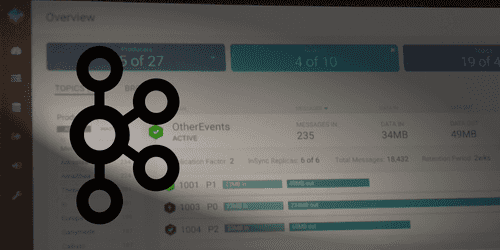

Remède à l'aveuglement de Kafka

Categories: Big Data | Tags: Ambari, Ranger, Hortonworks, HDF, JMX, UI, Kafka, HDP

Il est difficile de visualiser pour les développeurs, opérateurs et manageurs, ce qui se cache à l’intérieur des entrailles de Kafka. Cet article parle d’une nouvelle interface graphique bientôt…

Jun 20, 2018

Ingestion de Data Lake, quelques bonnes pratiques

Categories: Big Data, Data Engineering | Tags: Gouvernance des données, HDF, Exploitation, Avro, Hive, NiFi, ORC, Spark, Data Lake, Format de fichier, Protocol Buffers, Registre, Schéma

La création d’un Data Lake demande de la rigueur et de l’expérience. Voici plusieurs bonnes pratiques autour de l’ingestion des données en batch et en flux continu que nous recommandons et mettons en…

By WORMS David

Jun 18, 2018

Apache Hadoop YARN 3.0 - État de l'art

Categories: Big Data, DataWorks Summit 2018 | Tags: GPU, Hortonworks, Hadoop, HDFS, MapReduce, YARN, Cloudera, Data Science, Docker, Versions et évolutions

Cet article couvre la présentation ”Apache Hadoop YARN: state of the union” (YARN état de l’art) par Wangda Tan d’Hortonworks lors de la conférence DataWorks Summit 2018 Berlin (anciennement Hadoop…

May 31, 2018

Exécuter des workloads d'entreprise dans le Cloud avec Cloudbreak

Categories: Big Data, Cloud computing, DataWorks Summit 2018 | Tags: Cloudbreak, Exploitation, Hadoop, AWS, Azure, GCP, HDP, OpenStack

Cet article se base sur la conférence de Peter Darvasi et Richard Doktorics “Running Enterprise Workloads in the Cloud” au DataWorks Summit 2018 à Berlin. Il présentera l’outil de déploiement…

May 28, 2018

Omid : Traitement de transactions scalables et hautement disponibles pour Apache Phoenix

Categories: Big Data, DataWorks Summit 2018 | Tags: Omid, Phoenix, Transaction, ACID, HBase, SQL

Apache Omid fournit une couche transactionnelle au-dessus des bases de données clés/valeurs NoSQL. Crédits à Ohad Shacham pour son discours et son travail pour Apache Omid. Cet article est le résultat…

May 24, 2018

Le futur de l'orchestration de workflows dans Hadoop : Oozie 5.x

Categories: Big Data, DataWorks Summit 2018 | Tags: Hadoop, Hive, Oozie, Sqoop, CDH, HDP, REST

Au DataWorks Summit Europe 2018 de Berlin, j’ai eu l’occasion d’assister à une session sur Apache Oozie. La présentation se concentre sur les caractéristiques du prochain Oozie 5.0 et celles à venir…

May 23, 2018

Questions essentielles sur les base de données Time Series

Categories: Big Data | Tags: Druid, HBase, Hive, ORC, Data Science, Elasticsearch, Grafana, IOT

Aujourd’hui, le gros des données Big Data est de nature temporelle. On le constate dans les médias comme chez nos clients : compteurs intelligents, transactions bancaires, usines intelligentes,…

By WORMS David

Mar 18, 2018

Ambari - Comment utiliser les blueprints

Categories: Big Data, DevOps & SRE | Tags: Ambari, Ranger, Automation, DevOps, Exploitation, REST

En tant qu’ingénieurs d’infrastructure chez Adaltas, nous déployons des clusters. Beaucoup de clusters. Généralement, nos clients choisissent d’utiliser une distribution telle que Hortonworks HDP ou…

Jan 17, 2018

Cloudera Sessions Paris 2017

Categories: Big Data, Évènements | Tags: EC2, Altus, CDSW, SDX, Azure, Cloudera, CDH, Data Science, PaaS

Adaltas était présent le 5 octobre aux Cloudera Sessions, la journée de présentation des nouveaux produits Cloudera. Voici un compte rendu de ce que nous avons pu voir. Note : les informations ont été…

Oct 16, 2017

Changer la couleur de la topbar d'Ambari

Categories: Big Data, Hack | Tags: Ambari, Front-end

J’étais récemment chez un client qui a plusieurs environnements (Prod, Integration, Recette, …) sur HDP avec chacun son instance Ambari. L’une des questions soulevée par le client est la suivante…

Jul 9, 2017

MiNiFi : Scalabilité de la donnée & de l'intérêt de commencer petit

Categories: Big Data, DevOps & SRE, Infrastructure | Tags: MiNiFi, C++, HDF, NiFi, Cloudera, HDP, IOT

Aldrin nous a rapidement présenté Apache NiFi puis expliqué d’où est venu MiNiFi : un agent NiFi à déployer sur un embarqué afin d’amener la donnée à pipeline d’un cluster NiFi (ex : IoT). Ce poste…

Jul 8, 2017

Administration Hadoop multitenant avancée - protection de Zookeeper

Categories: Big Data, Infrastructure | Tags: DoS, iptables, Exploitation, Passage à l'échelle, Zookeeper, Clustering, Consensus

Zookeeper est un composant critique au fonctionnement d’Hadoop en haute disponibilité. Ce dernier se protège en limitant le nombre de connexions max (maxConns=400). Cependant Zookeeper ne se protège…

Jul 5, 2017

Supervision de clusters HDP

Categories: Big Data, DevOps & SRE, Infrastructure | Tags: Alert, Ambari, Métriques, Supervision, HDP, REST

Avec la croissance actuelle des technologies BigData, de plus en plus d’entreprises construisent leurs propres clusters dans l’espoir de valoriser leurs données. L’une des principales préoccupations…

Jul 5, 2017

Hive Metastore HA avec DBTokenStore : Failed to initialize master key

Categories: Big Data, DevOps & SRE | Tags: Infrastructure, Hive, Bug

Cet article décrit ma petite aventure autour d’une erreur au démarrage du Hive Metastore. Elle se reproduit dans un environnement précis qui est celui d’une installation sécurisée, entendre avec…

By WORMS David

Jul 21, 2016

Maitrisez vos workflows avec Apache Airflow

Categories: Big Data, Tech Radar | Tags: DevOps, Airflow, Cloud, Python

Ci-dessous une compilation de mes notes prises lors de la présentation d’Apache Airflow par Christian Trebing de chez BlueYonder. Introduction Use case : comment traiter des données arrivant…

Jul 17, 2016

Hive, Calcite et Druid

Categories: Big Data | Tags: Druid, Business Intelligence, Base de données, Hadoop, Hive

BI/OLAP est nécessaire à la visualisation interactive de flux de données : Évènements issus d’enchères en temps réel Flux d’activité utilisateur Log de téléphonie Suivi du trafic réseau Évènements de…

By WORMS David

Jul 14, 2016

L'offre Red Hat Storage et son intégration avec Hadoop

Categories: Big Data | Tags: GlusterFS, Red Hat, Hadoop, HDFS, Storage

J’ai eu l’occasion d’être introduit à Red Hat Storage et Gluster lors d’une présentation menée conjointement par Red Hat France et la société StartX. J’ai ici recompilé mes notes, du moins…

By WORMS David

Jul 3, 2015

Installation d'Hadoop et d'HBase sous OSX en mode pseudo-distribué

Categories: Big Data, Formation | Tags: Hue, Infrastructure, Hadoop, HBase, Big Data, Déploiement

Le système d’exploitation choisi est OSX mais la procédure n’est pas si différente pour tout environnement Unix car l’essentiel des logiciels est téléchargé depuis Internet, décompressé et paramétré…

By WORMS David

Dec 1, 2010

Stockage et traitement massif avec Hadoop

Categories: Big Data | Tags: Hadoop, HDFS, Storage

Apache Hadoop est un système pour construire des infrastructures de stockage partagé et d’analyses adaptées à des volumes larges (plusieurs terabytes ou petabytes). Les clusters Hadoop sont utilisés…

By WORMS David

Nov 26, 2010

Stockage et traitement massif avec Hadoop

Categories: Big Data, Node.js | Tags: HBase, Big Data, Node.js, REST

HBase est la base de données de type “column familly” de l’écosystème Hadoop construite sur le modèle de Google BigTable. HBase peut accueillir de très larges volumes de données (de l’ordre du tera ou…

By WORMS David

Nov 1, 2010

Présentation de MapReduce

Categories: Big Data | Tags: Java, MapReduce, Big Data, JavaScript

Les systèmes d’information ont de plus en plus de données à stocker et à traiter. Des entreprises comme Google, Facebook, Twitter mais encore bien d’autre stockent des quantités d’information…

By WORMS David

Jun 26, 2010