Data Governance

Data governance is a set of procedures that ensures important data is formally managed throughout the company.

It builds trust in datasets and establishes user accountability when data quality is poor. This is particularly important for a Big Data platform fully integrated into the company, where multiple datasets, processing pipelines and users coexist.

Organization, responsibilities

The right organization of people eases communication and understanding between teams while promoting an agile, data-centric culture. A single point of accountability is a key principle of effective project governance, and it introduces new roles (Data Council, Data Steward…).

Authorization/ACL

Each component of the cluster inherently has its own access control mechanisms. Fine-grained access rules on a file system are not managed the same way as those of a relational database. These rules can be based on roles (RBAC), on tags, or even on the geolocation of an IP address.
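As an illustration of role-based and tag-based rules, here is a minimal Python sketch. It is not the API of any particular component: the `Rule` and `Resource` classes, the role names and the tags are all hypothetical and only show how a role and a tag can be combined in one access decision.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rule:
    """Hypothetical rule: a role may perform an action on resources carrying a tag."""
    role: str
    action: str
    tag: str


@dataclass
class Resource:
    """Hypothetical resource (file, table, topic) described by a set of tags."""
    name: str
    tags: set = field(default_factory=set)


def is_allowed(user_roles, action, resource, rules):
    """Return True if any rule grants `action` on `resource` to one of the user's roles."""
    return any(
        rule.role in user_roles
        and rule.action == action
        and rule.tag in resource.tags
        for rule in rules
    )


# Illustrative policy: analysts read public data, stewards read and write PII.
rules = [
    Rule(role="analyst", action="read", tag="public"),
    Rule(role="steward", action="read", tag="pii"),
    Rule(role="steward", action="write", tag="pii"),
]

customers = Resource(name="crm.customer", tags={"pii"})

print(is_allowed({"analyst"}, "read", customers, rules))   # False
print(is_allowed({"steward"}, "write", customers, rules))  # True
```

Access is denied by default: a request passes only when an explicit rule matches the role, the action and one of the resource tags.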

Authentication, Identification

Identity management covers users and whether they exist, their group memberships, and the management rules applied to them. It is shared across the company by integrating the target platform with the company's LDAP server or Active Directory.

Naming

The company is responsible for defining a set of naming rules that ensure the integrity and coherence of the system. The purpose is to guarantee that business and technical users understand names, while enforcing consistent conventions and structures. A name must: be meaningful, be comprehensible without external explanation, reflect the intended usage of the resource, differ from other names as much as possible, use full words whenever possible, use the same abbreviation consistently, and be singular.
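Some of these rules can be checked automatically. The Python sketch below assumes a lowercase, underscore-separated convention and a small registry of discouraged abbreviations. Both are hypothetical choices made for the example, and the singular check is deliberately naive.

```python
import re

# Hypothetical convention: lowercase words separated by underscores.
NAME_PATTERN = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")

# Hypothetical registry: discouraged abbreviations and the full word to use instead.
DISCOURAGED_ABBREVIATIONS = {
    "cust": "customer",
    "trx": "transaction",
    "txn": "transaction",
}


def check_name(name):
    """Return a list of human-readable violations for a dataset or column name."""
    problems = []
    if not NAME_PATTERN.match(name):
        problems.append("use lowercase words separated by underscores")
    for word in name.lower().split("_"):
        if word in DISCOURAGED_ABBREVIATIONS:
            problems.append(
                f"replace '{word}' with '{DISCOURAGED_ABBREVIATIONS[word]}'"
            )
    # Naive singular check: a real rule set needs exceptions such as "status".
    if name.lower().endswith("s") and not name.lower().endswith("ss"):
        problems.append("use a singular name")
    return problems


print(check_name("customer_order"))  # []
print(check_name("cust_orders"))
```

Rules such as "be meaningful" still require human review. A checker like this only covers the mechanical part of the convention.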

Metadata, Data Lineage

Tags enable the traceability of data across its lifecycle: collection, qualification, enrichment, consumption. This process records where the data comes from, where it passed through, which people or applications accessed it, and how it was altered. Collecting this information systematically makes it possible to classify data, capture user and application behavior, track and analyze data-related actions, and ensure that usage complies with the security policies in place.
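To make the idea concrete, the following Python sketch records one event per lifecycle stage and walks upstream from a dataset to its origin. The `LineageEvent` structure, the dataset names and the actors are hypothetical. Dedicated lineage tools define their own, richer models.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LineageEvent:
    """Hypothetical lineage record for one dataset."""
    dataset: str
    stage: str                    # collection, qualification, enrichment or consumption
    actor: str                    # user or application that touched the data
    change: str                   # how the data was altered
    source: Optional[str] = None  # upstream dataset, if any


def trace(dataset, events):
    """Walk upstream from `dataset` and return its events, oldest first."""
    by_dataset = {event.dataset: event for event in events}
    chain, seen, current = [], set(), dataset
    # The `seen` set guards against accidental cycles in the recorded events.
    while current in by_dataset and current not in seen:
        seen.add(current)
        chain.append(by_dataset[current])
        current = by_dataset[current].source
    return list(reversed(chain))


events = [
    LineageEvent("raw.order", "collection", "ingest-job", "loaded from source system"),
    LineageEvent("clean.order", "qualification", "quality-job", "schema applied", "raw.order"),
    LineageEvent("mart.revenue", "enrichment", "reporting-job", "joined with customers", "clean.order"),
]

for event in trace("mart.revenue", events):
    print(f"{event.stage}: {event.dataset} by {event.actor} ({event.change})")
```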

Data Quality

Data qualification is the responsibility of the development teams. A single point of contact must be identified to be accountable and assume responsibility. It is crucial to establish a clear chain of responsibility in which roles are not shared. Teams can rely on an existing toolset to validate and apply the relevant schema to each and every record. Moreover, the core components must protect against potential corruption of data at rest and in motion.
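The two ideas above, per-record schema validation and protection against corruption, can be sketched in a few lines of Python. The schema, the field names and the choice of SHA-256 are assumptions made for the example. In practice, teams would rely on the schema tooling and the checksum mechanisms provided by their platform.

```python
import hashlib

# Hypothetical schema: field name mapped to the expected Python type.
SCHEMA = {"id": int, "email": str, "amount": float}


def validate(record, schema):
    """Return the list of schema violations found in one record."""
    errors = []
    for name, expected in schema.items():
        if name not in record:
            errors.append(f"missing field: {name}")
        elif not isinstance(record[name], expected):
            actual = type(record[name]).__name__
            errors.append(f"{name}: expected {expected.__name__}, got {actual}")
    return errors


def checksum(payload: bytes) -> str:
    """Digest computed before a transfer and compared after it to detect corruption."""
    return hashlib.sha256(payload).hexdigest()


print(validate({"id": 1, "email": "a@example.com", "amount": 9.5}, SCHEMA))  # []
print(validate({"id": "1", "email": "a@example.com"}, SCHEMA))

sent = b"id,email,amount\n1,a@example.com,9.5\n"
received = sent  # in a real pipeline, the bytes read back after transfer or storage
print(checksum(sent) == checksum(received))  # True
```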

Resource allocation

In a multi-tenant environment, YARN is responsible for ensuring that allocated resources are available to its users and groups of users. The resources traditionally managed by YARN are memory and CPU. More recent versions of YARN add the management of network and disk resources. Based on its owner, each process execution is associated with a scheduling queue that has a dedicated amount of cluster resources. YARN enforces the availability of the resources allocated to each user.
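The relation between queues and dedicated resources can be illustrated with a small Python model. This is not YARN code or configuration: the queue names, the percentages and the cluster size are hypothetical, and the sketch only shows how a percentage per queue translates into a guaranteed amount of memory and CPU.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Queue:
    """Hypothetical scheduling queue with a guaranteed share of the cluster."""
    name: str
    capacity_percent: float


def guaranteed_resources(queues, cluster_memory_mb, cluster_vcores):
    """Translate each queue's percentage into guaranteed memory and vcores."""
    total = sum(queue.capacity_percent for queue in queues)
    if abs(total - 100.0) > 1e-9:
        raise ValueError(f"queue capacities must sum to 100, got {total}")
    return {
        queue.name: {
            "memory_mb": int(cluster_memory_mb * queue.capacity_percent / 100),
            "vcores": int(cluster_vcores * queue.capacity_percent / 100),
        }
        for queue in queues
    }


queues = [Queue("etl", 50), Queue("analytics", 30), Queue("adhoc", 20)]
shares = guaranteed_resources(queues, cluster_memory_mb=1_000_000, cluster_vcores=200)

for name, share in shares.items():
    print(name, share)
```

Rejecting a set of queues whose capacities do not sum to 100 keeps the model honest: every unit of cluster resource is assigned to exactly one queue.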

Data lifecycle

Information Lifecycle Management (ILM) encompasses the entire collection and processing chain. Its purpose is to plan the processing of data across one or several clusters, and to store and archive data while securing it and enforcing retention periods.

Articles related to governance
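A retention rule can be expressed as a simple function of a dataset's age. The Python sketch below is a minimal example: the thresholds (archive after 90 days, delete after 365 days) and the three actions are assumptions, not values prescribed by any tool.

```python
from datetime import date


def lifecycle_action(created, today, archive_after_days, retention_days):
    """Decide what to do with a dataset based on its age in days."""
    age = (today - created).days
    if age >= retention_days:
        return "delete"
    if age >= archive_after_days:
        return "archive"
    return "keep"


# Hypothetical policy: archive after 90 days, delete after 365 days.
today = date(2024, 6, 1)
for created in (date(2024, 5, 2), date(2024, 1, 1), date(2023, 1, 1)):
    print(created, lifecycle_action(created, today, archive_after_days=90, retention_days=365))
```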

Introduction to OpenLineage

Categories: Big Data, Data Governance, Infrastructure | Tags: Data Engineering, Infrastructure, Atlas, Data Lake, Lakehouse, Data Warehouse, Data Lineage

OpenLineage is an open source specification for data lineage. The specification is complemented by Marquez, its reference implementation. Since its launch in late 2020, OpenLineage is…

Feb 19, 2024

CDP part 5: user permission management on CDP

Categories: Big Data, Cloud computing, Data Governance | Tags: Ranger, Cloudera, CDP, Data Warehouse

When a user or a group is created in CDP, permissions must be assigned to them to access resources and use the Data Services. This article is the fifth in a series…

Jul 18, 2023

CDP part 4: user management on CDP with Keycloak

Categories: Big Data, Cloud computing, Data Governance | Tags: EC2, Big Data, CDP, Docker Compose, Keycloak, SSO

The previous articles in the series cover the deployment of a CDP Public Cloud environment. All the components are ready to be used and it is time to make the environment…

Jul 4, 2023

Ceph object storage in a Kubernetes cluster with Rook

Categories: Big Data, Data Governance, Training | Tags: Amazon S3, Big Data, Ceph, Cluster, Data Lake, Kubernetes, Storage

Ceph is an all-in-one distributed storage system. Reliable and mature, its first stable version was released in 2012 and it has since been the reference for open source storage. The main advantage of…

By BIGOT Luka

Aug 4, 2022

Object storage with MinIO in a Kubernetes cluster

Categories: Big Data, Data Governance, Training | Tags: Amazon S3, Big Data, Cluster, Data Lake, Kubernetes, Storage

MinIO is a popular object storage solution. Often recommended for its ease of use and installation, MinIO is not only a good way to get started with object storage…

By BIGOT Luka

Jul 9, 2022

Object storage architecture and attributes of the S3 standard

Categories: Big Data, Data Governance | Tags: Database, API, Amazon S3, Big Data, Data Lake, Storage

Object storage has gained popularity among data storage architectures. Compared to file systems and block storage, object storage faces no limitations when…

By BIGOT Luka

Jun 20, 2022

Securing services with Open Policy Agent

Categories: Cybersecurity, Data Governance | Tags: Ranger, Kafka, Authorization, Cloud, Kubernetes, REST, SSL/TLS

Open Policy Agent is a general-purpose policy engine. The main goal of the project is to centralize the enforcement of security rules across the cloud native stack. The project was created…

Jan 22, 2020

Innovation, project culture vs product culture in Data Science

Categories: Data Science, Data Governance | Tags: DevOps, Agile, Scrum

Data Science carries within it the business of tomorrow. It is closely tied to the understanding of the business, of behaviors and of the intelligence that will be drawn from existing data. The stakes are…

By WORMS David

Oct 8, 2019

Users and RBAC authorizations in Kubernetes

Categories: Container Orchestration, Data Governance | Tags: Cybersecurity, RBAC, Authentication, Authorization, Kubernetes, SSL/TLS

Deploying a Kubernetes cluster is only the beginning of your journey and you now need to operate it. To secure its access, user identities must be declared with…

Aug 7, 2019

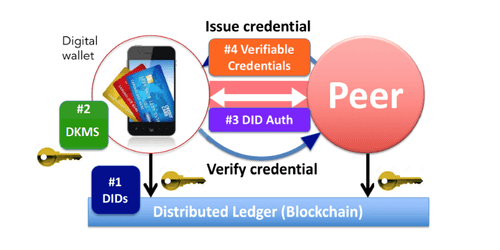

Self-sovereign identities

Categories: Data Governance | Tags: Authentication, Blockchain, Cloud, IAM, Ledger

Towards a trustworthy, personal, persistent and portable digital identity for all. Digital identity problems. Self-sovereign identities are an attempt to redefine the notion of identity…

By MELLAL Nabil

Jan 23, 2019

User identity management on Big Data clusters

Categories: Cybersecurity, Data Governance | Tags: LDAP, Active Directory, Ansible, FreeIPA, IAM, Kerberos

Securing a Big Data cluster involves integrating or deploying specific services to store users. Some users are specific to a cluster when…

By WORMS David

Nov 8, 2018