An overview of Cloudera Data Platform (CDP)

Jul 19, 2021


Cloudera Data Platform (CDP) is a cloud computing platform for businesses. It provides integrated, multifunctional self-service tools to centralize and analyze data, and it brings enterprise-level security and governance to deployments on public, private, and multi-cloud infrastructure. CDP is the successor to Cloudera’s two previous Hadoop distributions: Cloudera Distribution of Hadoop (CDH) and Hortonworks Data Platform (HDP). In this article, we dive into Cloudera’s new Big Data offering and how it differs from its predecessors.

Overview

CDP features a unique public-private approach, real-time data analytics, scalable deployment options (on-premises, in the cloud, and hybrid cloud), and a privacy-first architecture. According to its official website, CDP enables you to:

- Automatically spin up workloads when needed and suspend them when finished, keeping cloud costs under control

- Use analytics and Machine Learning to optimize workloads

- View data lineage across all cloud and transient clusters

- Use a single pane of glass across hybrid and multi-cloud environments

- Scale to petabytes of data and thousands of diverse users

- Centralize control of customer and operational data across multi-cloud and hybrid environments

CDP is available in two editions: CDP Public Cloud and CDP Private Cloud.

CDP Public Cloud

CDP Public Cloud is a Platform-as-a-Service (PaaS) that runs on cloud infrastructure and can be moved easily between cloud providers, including private solutions such as OpenShift. CDP was built to be fully hybrid and multi-cloud: a single platform handles all data lifecycle use cases, regardless of location or cloud, with a consistent security and governance model. CDP can work with data in a variety of settings, including public clouds such as AWS, Azure, and GCP. Furthermore, it can automatically scale workloads and resources up and down to improve performance and lower costs.
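
Automatic scaling of this kind can be pictured as a target-tracking rule. The sketch below is only an illustration under stated assumptions: it borrows the proportional formula used by the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current × observed / target)), and the function name and parameters are hypothetical. It is not CDP’s actual scaling algorithm, which is not described in this article.

```python
def desired_nodes(current_nodes: int, observed_pct: int, target_pct: int,
                  min_nodes: int, max_nodes: int) -> int:
    """Hypothetical HPA-style target-tracking rule, NOT CDP's own algorithm.

    observed_pct and target_pct are utilization percentages (e.g. CPU),
    kept as integers so the ceiling division below is exact.
    """
    if current_nodes <= 0 or target_pct <= 0:
        raise ValueError("current_nodes and target_pct must be positive")
    # Scale in proportion to how far utilization is from the target,
    # rounding up: ceil(demand / target) using integer arithmetic only.
    demand = current_nodes * observed_pct
    proposed = -(-demand // target_pct)
    # Clamp to the configured bounds so capacity and cost stay predictable.
    return max(min_nodes, min(max_nodes, proposed))


print(desired_nodes(4, 90, 60, min_nodes=2, max_nodes=10))  # 6: scale out
print(desired_nodes(3, 20, 60, min_nodes=2, max_nodes=10))  # 2: raised to the floor
print(desired_nodes(3, 0, 60, min_nodes=0, max_nodes=10))   # 0: idle, suspended
```

The upper bound caps spending, while a floor of zero lets an idle workload scale down completely, which is one way to picture the "suspend when finished" behavior mentioned earlier.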

CDP Public Cloud services

Here are the main elements that make up the CDP Public Cloud:

Data Engineering

CDP Data Engineering is an all-in-one Data Engineering toolkit. Built on Apache Spark, it streamlines ETL processes across enterprise analytics teams, with orchestration and automation through Apache Airflow, and provides advanced pipeline monitoring, visual debugging, and comprehensive management tools. It runs workloads in isolated, containerized environments that are scalable and easy to move.

Data Hub

CDP Data Hub is a service that enables high-value analytics from the Edge to AI. It covers a wide range of analytical workloads, including streaming, ETL, data marts, databases, and Machine Learning.

Data Warehouse

CDP Data Warehouse is a service that allows IT to provide a cloud-native, self-service analytic experience to BI analysts. Streaming, Data Engineering, and Machine Learning (ML) analytics are fully integrated with CDP Data Warehouse. Its unified framework lets you secure and govern all of your data and metadata across private, multiple public, or hybrid clouds.

Machine Learning

CDP Machine Learning optimizes ML workflows with native, comprehensive tools for deploying, serving, and monitoring models. By extending Cloudera Shared Data Experience (SDX) to models, it governs and automates model cataloging, and makes it easy to share results with other CDP experiences such as Data Warehouse and Operational Database.

Data Visualization

With Cloudera Data Visualization, users can model data in the virtual data warehouse without changing the underlying data structures or tables, and query large amounts of data without repeatedly reloading it, saving time and money.

Operational Database

Cloudera Operational Database experience is a managed solution that abstracts the underlying cluster instance as a database. It automatically scales based on the cluster’s workload, improves performance within the same infrastructure footprint, and automatically resolves operational issues.

Architecture

In this section, we present the main services available on CDP Public Cloud. The components featured here can be used independently or together.

- Data Hub
- Management Console: service used by CDP administrators to manage environments, users, and services
- Data Warehouse
  - Database Catalogs: a logical collection of metadata definitions for managed data, together with its associated data context
  - Virtual Warehouses: an instance of compute resources, equivalent to a cluster
- Machine Learning: provision workspaces for Machine Learning
- Data Engineering (CDE; at the time of writing, available only on AWS)
  - Environment: a logical subset of your cloud provider account that includes a specific virtual network
  - CDE Service: the long-running Kubernetes cluster and services that manage the virtual clusters
  - Virtual Cluster: an individual, self-scaling cluster with its own CPU and memory ranges
  - Job: application code, along with its configuration and resources
  - Resource: a defined set of files required by a job
- Security and governance
  - Data Catalog: understand, manage, secure, and govern data assets
  - Workload Manager: provides insights into the workloads you send to clusters managed by Cloudera Manager
  - Replication Manager: service to copy and migrate data from CDH clusters to CDP Public Cloud, supporting:
    - HDFS replication
    - Hive metadata replication
    - Hive external table replication
    - Table-level replication
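
The Data Engineering hierarchy listed above (Environment, CDE Service, Virtual Cluster, Job, Resource) can be summarized as a small object model. This is a hypothetical sketch for illustration only: the class names, fields, and the `fits` quota check are assumptions made here, not the CDE API or CLI. The Spark setting used in the example is a standard Spark configuration key, included only to show what a job configuration might contain.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Resource:
    """Hypothetical model: a named set of files a job needs (scripts, JARs...)."""
    name: str
    files: list[str]


@dataclass
class Job:
    """Hypothetical model: application code plus its configuration and resources."""
    name: str
    entry_point: str
    config: dict[str, str] = field(default_factory=dict)
    resources: list[Resource] = field(default_factory=list)

    def required_files(self) -> list[str]:
        # Gather every file from every attached resource, preserving order.
        return [f for r in self.resources for f in r.files]


@dataclass
class VirtualCluster:
    """Hypothetical model: a self-scaling cluster bounded by CPU/memory limits."""
    name: str
    max_cpu: int
    max_memory_gb: int
    jobs: list[Job] = field(default_factory=list)

    def fits(self, cpu: int, memory_gb: int) -> bool:
        # A request is admissible only if it stays within the cluster's upper bounds.
        return cpu <= self.max_cpu and memory_gb <= self.max_memory_gb


@dataclass
class CdeService:
    """Hypothetical model: the long-running Kubernetes-backed service hosting virtual clusters."""
    name: str
    virtual_clusters: list[VirtualCluster] = field(default_factory=list)


@dataclass
class Environment:
    """Hypothetical model: a logical subset of a cloud account tied to one virtual network."""
    name: str
    network_id: str
    services: list[CdeService] = field(default_factory=list)


# Usage: one environment, one CDE service, one virtual cluster running one job.
etl = Resource("etl-scripts", ["transform.py", "utils.py"])
job = Job("daily-etl", entry_point="transform.py",
          config={"spark.executor.instances": "4"}, resources=[etl])
vc = VirtualCluster("analytics-vc", max_cpu=64, max_memory_gb=256, jobs=[job])
env = Environment("prod-aws", network_id="vpc-123",
                  services=[CdeService("cde-prod", [vc])])

print(job.required_files())             # ['transform.py', 'utils.py']
print(vc.fits(cpu=32, memory_gb=128))   # True
```

Keeping resources separate from jobs mirrors the split described in the list: the same set of files can be attached to several jobs without duplicating it.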

CDP Private Cloud

CDP Private Cloud is designed for hybrid cloud deployment, enabling on-premises environments to connect to public clouds while maintaining consistent, integrated security and governance. In CDP Private Cloud, compute and storage are decoupled, so compute clusters and storage clusters can scale independently. Available on a CDP Private Cloud Base cluster, Cloudera Shared Data Experience (SDX) delivers unified security, governance, and metadata management. Using the Management Console, CDP Private Cloud users can quickly provision and deploy Cloudera Data Warehouse and Cloudera Machine Learning services, and scale them in and out as needed.

CDP Private Cloud services

Some CDP Public Cloud components, such as Machine Learning and Data Warehouse, are also available on CDP Private Cloud. In addition, it includes a collection of analytic engines covering streaming, Data Engineering, data marts, operational database, and Data Science to support traditional workloads.

Architecture

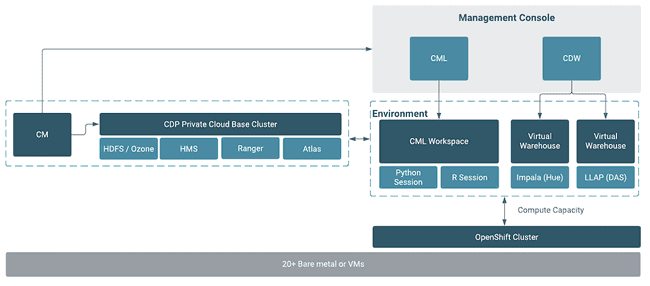

In this section, we present various services and components available for the Private Cloud. Unlike in the Public Cloud offer, the components are much more flexible since the user has more control over the cluster deployment.

Cloudera Private Cloud architecture (provided by Cloudera, Inc.)

- CDP PVC Base
  - Cloudera Manager
  - Hadoop
  - Storage, databases
  - Streaming
    - Kafka: streaming messaging platform
    - Streams Messaging Manager (SMM): operations monitoring and management tool that provides end-to-end visibility into an enterprise Apache Kafka environment
    - Streams Replication Manager (SRM): enterprise-grade replication solution for fault-tolerant, scalable, and robust cross-cluster Kafka topic replication
  - Query
  - UI
    - Hue: SQL assistant for querying databases and data warehouses and collaborating on queries
    - Zeppelin: web-based notebook for analyzing and formatting large volumes of data processed with Spark
    - Data Analytics Studio (DAS): application that provides diagnostic tools and intelligent recommendations to help business analysts become more self-sufficient and productive with Hive
  - Security, administration
- CDP PVC Plus
  - OpenShift: container platform used to deploy projects in containers
  - Experiences
    - Data Warehouse: self-service creation of self-contained data warehouses and data marts that automatically scale up and down in response to changing workload demands
    - Machine Learning: deployment of Machine Learning workspaces
  - Cloudera Data Science Workbench (CDSW): platform that enables data scientists to manage their own analytics pipelines
  - Cloudera Flow Management (CFM)
    - NiFi: automates data movement between different systems
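
SRM copies a topic from a source cluster to a target cluster under a new name. Assuming SRM keeps the default naming convention of Apache Kafka’s MirrorMaker 2, which it builds on (the source cluster alias plus a `.` separator, e.g. `us-west.orders`), the sketch below shows how that prefix lets a replicator recognize where a topic came from and skip replication cycles. The cluster aliases and helper functions are hypothetical, and the parsing is deliberately simplified compared to the real replication policy.

```python
from __future__ import annotations

SEPARATOR = "."  # assumed MirrorMaker 2-style default separator


def remote_topic(source_alias: str, topic: str) -> str:
    """Name a replicated topic on the target cluster: '<source alias>.<topic>'."""
    return f"{source_alias}{SEPARATOR}{topic}"


def upstream_clusters(topic: str, known_aliases: set[str]) -> list[str]:
    """Return the chain of source-cluster prefixes on a topic, outermost first.

    Simplification: a prefix only counts if it is a known cluster alias, so a
    local topic such as 'app.logs' is not mistaken for a replicated one.
    """
    chain: list[str] = []
    head, sep, rest = topic.partition(SEPARATOR)
    while sep and head in known_aliases:
        chain.append(head)
        head, sep, rest = rest.partition(SEPARATOR)
    return chain


def should_replicate(topic: str, target_alias: str, known_aliases: set[str]) -> bool:
    """Skip topics that originally came from the target, which would form a cycle."""
    return target_alias not in upstream_clusters(topic, known_aliases)


aliases = {"us-east", "us-west"}
print(remote_topic("us-west", "orders"))                     # us-west.orders
print(upstream_clusters("us-east.us-west.orders", aliases))  # ['us-east', 'us-west']
print(should_replicate("orders", "us-west", aliases))        # True
print(should_replicate("us-west.orders", "us-west", aliases))  # False: would loop back
```

Encoding the origin in the topic name also keeps a replica from colliding with a topic of the same name that already exists on the target cluster.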

Benefits of CDP Private Cloud

- Flexibility: your organization’s cloud environment can be tailored to meet specific business requirements.

- Control: higher levels of control and privacy thanks to non-shared resources.

- Scalability: private clouds often scale more easily than traditional on-premises infrastructure.

Conclusion

Cloudera Data Platform (CDP) offers considerable versatility for building and maintaining a cloud-based production data warehouse, making it simple to migrate data to the cloud and run the data warehouse in production. Both editions, Public Cloud and Private Cloud, rely on the Shared Data Experience (SDX), which is in charge of security and governance. Overall, it is an adequate solution for organizations that need a reliable, scalable, and secure cloud environment, and it gives them the flexibility to choose between private and public cloud, each with its own benefits.