How to build your OCI images using Buildpacks

Jan 9, 2023


Docker has become the de facto standard for packaging applications. In a Docker image we place our source code, its dependencies and some configuration, and our application is almost ready to be deployed on our workstation or in production, either in the cloud or on premises. Over the past several years, the Docker image format has been superseded by the open standard defined by the OCI (Open Container Initiative). Today it is not even necessary to use a Dockerfile to build our applications! Let’s have a look at what Buildpacks offers in this respect, but first we need to understand what an OCI image really is.

OCI Layers

Let’s take a basic Node.js application as an example:

myapp

├── package.json

└── src

    └── index.js

To containerize our application, we usually write a Dockerfile. It contains the instructions needed to build the environment in which our application will run.

FROM node:16

WORKDIR /app

# We add our code to our future image

COPY package.json /app/package.json

COPY src/ /app/src

# We launch npm which will install the Node.js dependencies of our application

RUN npm install

CMD npm start

Once built, we can inspect our image with docker inspect (selected pieces):

(...)

"Config": {

"Hostname": "",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"NODE_VERSION=16.17.0",

"YARN_VERSION=1.22.19"

],

"Cmd": [

"/bin/sh",

"-c",

"npm start"

],

"Image": "sha256:ca5108589bcee5007319db215f0f22048fb7b75d4d6c16e6310ef044c58218c0",

"Volumes": null,

"WorkingDir": "/app",

"Entrypoint": [

"docker-entrypoint.sh"

],

"OnBuild": null,

"Labels": null

},

(...)

"Type": "layers",

"Layers": [

"sha256:20833a96725ec17c9ab15711400e43781a7b7fe31d23fc6c78a7cca478935d33",

"sha256:07b905e91599cd0251bd575fb77d0918cd4f40b64fa7ea50ff82806c60e2ba61",

"sha256:5cbe2d191b8f91d974fbba38b5ddcbd5d4882e715a9e008061ab6d2ec432ef7b",

"sha256:47b6660a2b9bb2091a980c361a1c15b2a391c530877961877138fffc50d2f6f7",

"sha256:1e69483976e43c657172c073712c3d741245f9141fb560db5af7704aee95114c",

"sha256:a51886a0928017dc307b85b85c6fb3f9d727b5204ea944117ce59c4b4acc5e05",

"sha256:ba9804f7abedf8ddea3228539ae12b90966272d6eb02fd2c57446e44d32f1b70",

"sha256:c77311ff502e318598cc7b6c03a5bd25182f7f6f0352d9b68fad82ed7b4e8c26",

"sha256:93a6676fffe0813e4ca03cae4769300b539b66234e95be66d86c3ac7748c1321",

"sha256:3cf3c6f03984f8a31c0181feb75ac056fc2bd56ef8282af9a72dafd1b6bb0c41",

"sha256:02dacaf7071cc5792c28a4cf54141b7058ee492c93f04176f8f3f090c42735eb",

"sha256:85152f012a08f63dfaf306c01ac382c1962871bf1864b357549899ec2fa7385d",

"sha256:8ceb0bd5afef8a5fa451f89273974732cd0c89dac2c80ff8b7855531710fbc49"

]

(...)

We can see a configuration block with:

- The environment variables

- The entrypoint command, the default command

- The working directory

- The user of the image

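And a second block, “Layers”, with a list of checksums. Each checksum identifies one layer, which is a tar archive of filesystem changes (usually stored and transferred compressed, as .tar.gz); the values listed here are the digests of the uncompressed archives. Applied on top of each other, these layers build a complete filesystem. To learn more about this topic I invite you to read David’s article.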
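To make the idea of stacked layers concrete, here is a minimal Python sketch. It models each layer as a dictionary of paths and applies the layers from bottom to top. The `apply_layers` function, the sample paths and the use of `None` to mark a deleted file are illustrative assumptions; real OCI layers are tar archives that use whiteout files for deletions.

```python
from typing import Dict, List, Optional

# path -> content; None marks a deletion (an assumption of this sketch)
Layer = Dict[str, Optional[str]]


def apply_layers(layers: List[Layer]) -> Dict[str, str]:
    """Merge layers bottom-to-top into a single filesystem view."""
    filesystem: Dict[str, str] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                filesystem.pop(path, None)  # upper layer removes the file
            else:
                filesystem[path] = content  # upper layer adds or overrides
    return filesystem


# Hypothetical layers: a base OS, a runtime, then the application.
base = {"/etc/os-release": "debian", "/tmp/cache": "junk"}
runtime = {"/usr/local/bin/node": "node-binary"}
app = {"/app/package.json": "{}", "/tmp/cache": None}

rootfs = apply_layers([base, runtime, app])
print(sorted(rootfs))
```

The order matters: the same three layers applied in a different order could leave `/tmp/cache` in place, which is why an image records its layers as an ordered list.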

How Docker builds an image

To understand how Docker builds an image, it is necessary to know the docker commit command. This command runs on a running container. It creates an image from the state of this container.

This mechanism is used for example in our RUN npm install instruction:

- An intermediate container is started by Docker.

- In this container, we launch the command npm install.

- Once the command is finished, Docker commits the container. The difference with the previous image yields an additional layer and thus a new intermediate image.

However, docker build has one important drawback: by default, the build is not reproducible. Two successive runs of docker build with the same Dockerfile will not necessarily produce the same layers, and therefore not the same checksums. The main cause is timestamps. Each file in a standard Linux filesystem has a creation date, a last modification date and a last access date. The image configuration also embeds a creation timestamp, which alters the image checksum. This makes it hard to isolate our different layers and to logically associate an operation in our Dockerfile with a layer in our final image.
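The effect of timestamps on layer checksums can be reproduced without Docker. The following Python sketch packs the same file into a tar archive twice and compares the SHA-256 digests. The `layer_digest` helper, the file name and the timestamp values are assumptions chosen for illustration; this is not how Docker itself assembles layers.

```python
import hashlib
import io
import tarfile


def layer_digest(files: dict, mtime: int) -> str:
    """Pack files into an uncompressed tar archive and return its sha256."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w") as archive:
        for path in sorted(files):  # fixed ordering keeps the archive stable
            data = files[path]
            info = tarfile.TarInfo(name=path)
            info.size = len(data)
            info.mtime = mtime  # the only field we vary between runs
            archive.addfile(info, io.BytesIO(data))
    return "sha256:" + hashlib.sha256(buffer.getvalue()).hexdigest()


files = {"app/src/index.js": b"console.log('hello')\n"}

# Same content, modification times one minute apart: the digests differ.
first = layer_digest(files, mtime=1_673_222_400)
second = layer_digest(files, mtime=1_673_222_460)
print(first != second)

# Same content, same pinned time: the digests are identical.
pinned_a = layer_digest(files, mtime=0)
pinned_b = layer_digest(files, mtime=0)
print(pinned_a == pinned_b)
```

Pinning every timestamp to a constant is the simplest way to make the archive, and therefore its checksum, depend only on the file contents.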

When the base image changes (the FROM instruction, for security reasons for example), Docker has to run a complete build. Uploading the updated image to our registry also means sending all the layers. Depending on the size of our image, this can generate a lot of traffic for every machine that hosts the image and needs to update it.

An image is simply a stack of layers on top of each other, along with configuration files. However Docker (and its Dockerfile) is not the only way to build an image. Buildpacks offers a different principle for building images. Buildpacks is a project incubated at the Cloud Native Computing Foundation.

What is a buildpack?

A buildpack is a set of executable scripts that will allow you to build and launch your application.

A buildpack is made of 3 components:

- buildpack.toml: the metadata of your buildpack

- bin/detect: script that determines if the buildpack applies to your application

- bin/build: script launching the build sequence of the application

Building your application means ‘running’ buildpacks one after the other.
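As a rough mental model of this sequence, the Python sketch below represents each buildpack as a pair of functions standing in for bin/detect and bin/build. The `Buildpack` type, the `run_buildpacks` function and the two example buildpacks are hypothetical, and the real lifecycle is more elaborate (for instance, it evaluates ordered groups of buildpacks), so treat this as a simplification.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, List, NamedTuple


class Buildpack(NamedTuple):
    name: str
    detect: Callable[[Path], bool]  # stands in for bin/detect
    build: Callable[[Path], str]    # stands in for bin/build


def run_buildpacks(app_dir: Path, buildpacks: List[Buildpack]) -> List[str]:
    """Run detect on every buildpack, then build those that apply, in order."""
    selected = [bp for bp in buildpacks if bp.detect(app_dir)]
    return [bp.build(app_dir) for bp in selected]


# Two hypothetical buildpacks keyed on the presence of a marker file.
node = Buildpack(
    name="example/node",
    detect=lambda app: (app / "package.json").is_file(),
    build=lambda app: "layer: node runtime + node_modules",
)
java = Buildpack(
    name="example/java",
    detect=lambda app: (app / "pom.xml").is_file(),
    build=lambda app: "layer: jdk + compiled jar",
)

with tempfile.TemporaryDirectory() as tmp:
    app_dir = Path(tmp)
    (app_dir / "package.json").write_text(json.dumps({"name": "myapp"}))
    layers = run_buildpacks(app_dir, [node, java])

print(layers)
```

Only the Node.js buildpack contributes a layer here, because the application directory contains a package.json and no pom.xml.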

To do this we use a builder. A builder is an image including a whole set of buildpacks, a lifecycle and a reference to a very light run image in which we will integrate our application. The build image/run image couple is called a stack.

Coming back to our Node.js application, Buildpacks will only use the information in the package.json.

{

"name": "myapp",

"version": "0.1",

"main": "index.js",

"license": "MIT",

"dependencies": {

"express": "^4.18.1"

},

"scripts": {

"start": "node src/index.js"

},

"engines": {

"node": "16"

}

}

This is all we need to build an image containing our application with buildpacks:

- The base image (the FROM of the Dockerfile) will be the one specified in the stack.

- The buildpacks lifecycle detects a package.json and thus starts the installation process of Node.js and the dependencies.

- The version of node will be the version specified in the package.json.

- The default command will be node src/index.js because it is the start command of the package.json.
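The way these settings are derived from package.json can be sketched in a few lines of Python. The `plan_from_package_json` function and the `"latest"` fallback are assumptions made for this example; actual Node.js buildpacks apply their own, more detailed version resolution rules.

```python
import json

# A trimmed copy of the package.json shown above.
PACKAGE_JSON = """
{
  "name": "myapp",
  "scripts": {"start": "node src/index.js"},
  "engines": {"node": "16"}
}
"""


def plan_from_package_json(text: str) -> dict:
    """Extract the Node.js version and the default command from package.json."""
    package = json.loads(text)
    return {
        # The "latest" fallback is an assumption of this sketch.
        "node_version": package.get("engines", {}).get("node", "latest"),
        "command": package.get("scripts", {}).get("start"),
    }


plan = plan_from_package_json(PACKAGE_JSON)
print(plan)
```

Everything a Dockerfile would state explicitly (runtime version, start command) is inferred here from metadata the project already maintains.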

The only command you need to know to use buildpacks is the following:

pack build myapp --builder gcr.io/buildpacks/builder:v1

Here we use the builder provided by Google ‘gcr.io/buildpacks/builder:v1’. Other builders are available (see pack stack suggest) or you can build your own!

It is then directly possible to launch our application.

$ docker run myapp

> myapp@0.0.1 start

> node src/index.js

Example app listening on port 3000

The advantages of using buildpacks include:

- The absence of a Dockerfile. Developers can concentrate on their code only.

- The respect of good image building practices, in terms of security, limitation of the number and size of layers. This is the responsibility of the buildpack designer, not the developer.

- Caching is native, whether it’s the binaries to be installed or the software libraries.

- Uses your organization’s best practices if you create your own buildpack (use of internal mirrors, code analysis…)

- Each layer of the final image is logically linked with the dependencies it brings.

- Each operation of a buildpack touches only a restricted area (a folder with the name of the buildpack in the run image)

- Buildpacks makes it possible to obtain reproducible builds with less effort.

  - The files copied into the image all carry the same fixed timestamp.

  - However, the intermediate build steps (e.g. compilation of Java code) must be idempotent, that is, produce identical output from identical input.

  - This makes possible an operation that Buildpacks calls ‘rebase’.

Image rebasing with Buildpacks

Image rebasing consists in swapping some layers of an image without having to modify the layers above them. This is particularly relevant when the base image of our run image changes. Let’s take the example of a Node.js application (roughly simplified).

At build time, Buildpacks relies on the lightest possible run image, adding layer after layer:

- A layer where the node binaries are located

- A layer where the node_modules (dependencies) of our application are located

Note that the npm binary is not needed in our run image. It will not be included, but it is used in our build image to install the node_modules which will be integrated in our run image.

In the event that our container is deployed in Kubernetes and a flaw is detected in its base image, it is necessary to update it. With Docker this would require rebuilding our image completely, uploading our entire new image to our registry, and each Kube worker would have to download this new image.

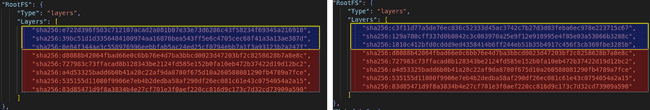

This is not necessary with Buildpacks: only the layers related to the base image need to be uploaded. Here we can compare two OCI images, one based on ubuntu:18.04, the other on ubuntu:20.04. The first 3 layers (in blue) are the Ubuntu-related layers. The next layers (in red) are the layers added by Buildpacks: they are identical in both images. This behavior is possible with Docker (but complex to set up); it is the default with Buildpacks.
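The comparison above can be modelled as a simple list manipulation. In the Python sketch below, an image is a list of base layers plus a list of buildpack layers, and a rebase replaces only the former. The `Image` type, the `rebase` and `layers_to_upload` functions and the layer names are placeholders invented for this example, not real digests or a real API.

```python
from typing import List, NamedTuple


class Image(NamedTuple):
    base_layers: List[str]  # layers coming from the run image
    app_layers: List[str]   # layers contributed by buildpacks


def rebase(image: Image, new_base_layers: List[str]) -> Image:
    """Swap the run-image layers and keep the buildpack layers untouched."""
    return Image(base_layers=list(new_base_layers), app_layers=image.app_layers)


def layers_to_upload(old: Image, new: Image) -> List[str]:
    """Only layers the registry has not seen yet need to be pushed."""
    known = set(old.base_layers) | set(old.app_layers)
    return [layer for layer in new.base_layers + new.app_layers if layer not in known]


old = Image(
    base_layers=["ubuntu18-a", "ubuntu18-b", "ubuntu18-c"],
    app_layers=["node-runtime", "node-modules", "app-source"],
)
new = rebase(old, ["ubuntu20-a", "ubuntu20-b", "ubuntu20-c"])

print(new.app_layers == old.app_layers)
print(layers_to_upload(old, new))
```

Because the buildpack layers keep the same checksums, the registry and every worker node already hold them; only the three new base layers travel over the network.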

Restrictions

Buildpacks comes with one important limitation: a buildpack can only act on a very limited area of the file system. Libraries and binaries must be installed in the folder of the buildpack that installs them. This gives each buildpack a clearly separated perimeter but requires more rigor. It is therefore no longer possible to simply launch installations via the package manager (apt, yum or apk). If this limitation cannot be worked around, the stack image itself has to be modified.

Conclusion

If you want to easily implement good image creation practices for your containerized applications, Buildpacks is an excellent choice to consider. It integrates easily with your CI/CD via projects like kpack. It may even already be part of your DevOps infrastructure, as Buildpacks is what powers GitLab’s Auto DevOps feature.