MinIO object storage within a Kubernetes cluster

By Luka BIGOT

Jul 9, 2022


MinIO is a popular object storage solution. Often recommended for its simple setup and ease of use, it is not only a great way to get started with object storage: it also provides excellent performance, making it as suitable for production as it is for beginners.

MinIO implements the object storage data architecture, which is scalable and simple to implement and use. Most object storage solutions expose the S3 interface, a de facto standard; as a result, different S3-compliant object storage solutions work with the same S3-compatible applications.

Our presentation of object storage architecture states that object storage solutions are not limited to the cloud: hosting them within a local cluster is possible. Hosting object storage locally is a good way to understand how it works both in its structure and usage, either for development and testing purposes or while aiming to deploy it in production.

This article aims to cover MinIO’s setup and usage in an on-premise Kubernetes cluster. It is part of a series of three:

- Architecture of object-based storage and the S3 standard

- MinIO object storage within a Kubernetes cluster

- Ceph object storage within a Kubernetes cluster with Rook

In this article series, the third article gives indications on how to host object storage through Ceph with the Rook operator. MinIO is simple to install and use. It provides object storage exclusively. In comparison, Ceph is much more modular. In addition to object storage, it also provides file system and block storage.

What is MinIO?

MinIO is an easy-to-deploy open-source object storage solution. It is Kubernetes-native. It runs on a Kubernetes cluster of bare-metal hardware or virtual machines.

MinIO is commonly deployed inside Kubernetes. While it is possible to install it directly on your host machine, this is less common and we do not cover this deployment mode.

For early testing and development purposes, it is possible to host a standalone MinIO Server in a single container which stores data in mounted directories instead of Kubernetes volumes. This server is a single instance of what the MinIO Kubernetes Operator deploys at cluster scale.
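For reference, such a standalone server can be started with a single Docker command. The following is a minimal sketch, assuming Docker is installed on the machine; quay.io/minio/minio is MinIO's published container image, while the helper name run_standalone_minio, the container name, the data directory and the credentials are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a single-container MinIO server for local testing.
# Assumptions: Docker is installed; the container name, data directory and
# credentials below are placeholders to replace with your own values.
run_standalone_minio() {
  local data_dir="${1:-/tmp/minio-data}"
  mkdir -p "$data_dir"
  # Port 9000 serves the S3 API and port 9001 the web console.
  # MINIO_ROOT_PASSWORD must be at least 8 characters long.
  docker run -d --name minio-standalone \
    -p 9000:9000 -p 9001:9001 \
    -v "$data_dir":/data \
    -e MINIO_ROOT_USER=minio-admin \
    -e MINIO_ROOT_PASSWORD=change-me-please \
    quay.io/minio/minio server /data --console-address ":9001"
}
```

Calling run_standalone_minio starts the server in the background; the S3 API is then served on http://localhost:9000 and the web console on http://localhost:9001.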

MinIO has two components:

- MinIO Server, the central core of the object storage and handler of the distributed data storage and related services;

- MinIO Client (mc), an S3 command-line tool to access and test the storage, also compatible with other S3 storage.

Being Kubernetes-native means MinIO leverages Kubernetes Persistent Volumes and Persistent Volume Claims as a means to store data.

MinIO complies with the usual object storage architecture, where data is logically divided into buckets for data isolation. MinIO is deployed in a Kubernetes cluster with the MinIO Operator, which supports multi-tenancy. Tenants are a storage layer above buckets: each tenant is a group of users with its own set of buckets and its own pods handling storage.

In the same way that AWS SDKs are provided for multiple programming languages, MinIO offers its own set of SDKs. Using a MinIO SDK simplifies access to the local MinIO object storage, as it avoids the configuration and dependency complexity associated with the AWS SDKs.

Object storage with MinIO in a Kubernetes cluster

As stated previously, MinIO can be deployed as a standalone container server for early testing. More advanced MinIO setups include bare-metal installs using the main repository’s resources, and a separate repository is dedicated to the MinIO Kubernetes Operator for cluster deployment. The latter allows us to easily obtain a distributed MinIO instance fit for both development and production scenarios; it is quick to deploy and convenient for this tutorial. The operator automates deployment in the cluster and handles complex configurations for us by default, such as certificate management.

This tutorial requires:

- A Kubernetes cluster with a Container Network Interface (CNI) plugin installed (for example, Calico);

- At least 3 worker nodes, each with 3 GB of RAM;

- Available unformatted disks on your worker nodes or unformatted partitions within those disks. Those must either be physical devices if on bare-metal, or virtual disks if using VMs.

This repository provides a template to easily obtain a cluster fulfilling this tutorial’s requirements.

If you wish to learn how to add virtual disks in a Vagrant cluster using VirtualBox, take a look at this article.

Note: For a production cluster, separating worker nodes from object storage nodes is a good practice, so that compute operations and storage operations do not affect each other. This is known as asymmetrical storage.

Before installing

First, create the MinIO Tenant namespace. Tenants are MinIO object storage consumers. Each namespace holds one MinIO tenant at most.

kubectl create namespace minio-tenant-1

Then, install Krew. Krew is a kubectl plugin installer, which allows us to install necessary MinIO components. It is installed by running the following commands:

# Install Krew

(

set -x; cd "$(mktemp -d)" &&

OS="$(uname | tr '[:upper:]' '[:lower:]')" &&

ARCH="$(uname -m | sed -e 's/x86_64/amd64/' -e 's/\(arm\)\(64\)\?.*/\1\2/' -e 's/aarch64$/arm64/')" &&

KREW="krew-${OS}_${ARCH}" &&

curl -fsSLO "https://github.com/kubernetes-sigs/krew/releases/latest/download/${KREW}.tar.gz" &&

tar zxvf "${KREW}.tar.gz" &&

./"${KREW}" install krew

)

# Add Krew to PATH

export PATH="${KREW_ROOT:-$HOME/.krew}/bin:$PATH"

# Verify that Krew's install is successful by updating the plugin index

kubectl krew update

Preparing drives for MinIO with DirectPV

Before initializing the MinIO Operator, one requirement for MinIO must be met. As previously noted, MinIO uses Kubernetes PVs, which must be provisioned somehow so that MinIO can create its dedicated object storage PVCs. The MinIO DirectPV driver automates this process by discovering, formatting and mounting the cluster drives.

Install the DirectPV plugin with Krew, and then install the driver itself:

kubectl krew install directpv

kubectl directpv install

In this tutorial, the drives are on the worker nodes. However, in a real production cluster using object storage, it is a good practice to separate your worker nodes from your dedicated storage nodes. This is achieved by applying taints on those storage nodes. Then, it is possible to specify taint tolerations as options when installing DirectPV.

After waiting for DirectPV to initialize, check the detected disks. Among the results, the dedicated storage disks on your nodes appear. If you also wish to see Unavailable disks, use the --all option:

kubectl directpv drives ls

# DRIVE CAPACITY ALLOCATED FILESYSTEM VOLUMES NODE ACCESS-TIER STATUS

# /dev/sda 20 GiB - - - demo-worker-01 - Available

# /dev/sdb 20 GiB - - - demo-worker-01 - Available

# /dev/sda 20 GiB - - - demo-worker-02 - Available

# /dev/sdb 20 GiB - - - demo-worker-02 - Available

# /dev/sda 20 GiB - - - demo-worker-03 - Available

# /dev/sdb 20 GiB - - - demo-worker-03 - Available

The disks without an existing filesystem, which are available for object storage, are displayed in this list.
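To avoid counting rows by eye on larger clusters, the listing can be filtered by status. A minimal sketch, assuming STATUS stays the last column of the kubectl directpv drives ls output as in the listing above; count_drives_with_status is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: count DirectPV drives whose STATUS matches $1.
# Assumption: STATUS is the last whitespace-separated column of
# `kubectl directpv drives ls`. The header row ends in "STATUS", so it
# never matches a real status value.
count_drives_with_status() {
  local wanted="$1"
  awk -v status="$wanted" '$NF == status { n++ } END { print n + 0 }'
}
```

On the demo cluster, kubectl directpv drives ls | count_drives_with_status Available should print 6 before formatting, and the same pipeline with Ready should print 6 once the drives are formatted.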

Now, select the drives to be formatted and managed by DirectPV. Here, the ellipsis pattern sd{a...f} selects all drives from sda to sdf, and demo-worker-0{1...3} selects the nodes demo-worker-01 through demo-worker-03:

# Modify the option values as necessary for your Kubernetes setup

kubectl directpv drives format --drives /dev/sd{a...f} --nodes demo-worker-0{1...3}

# Check for successful formatting

kubectl directpv drives ls

# DRIVE CAPACITY ALLOCATED FILESYSTEM VOLUMES NODE ACCESS-TIER STATUS

# /dev/sda 20 GiB - xfs - demo-worker-01 - Ready

# /dev/sdb 20 GiB - xfs - demo-worker-01 - Ready

# /dev/sda 20 GiB - xfs - demo-worker-02 - Ready

# /dev/sdb 20 GiB - xfs - demo-worker-02 - Ready

# /dev/sda 20 GiB - xfs - demo-worker-03 - Ready

# /dev/sdb 20 GiB - xfs - demo-worker-03 - Ready

The cluster drives are now configured to be automatically used for PVs and PVCs with MinIO.
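The {a...f} and {1...3} patterns in the format command above use three dots: this is an ellipsis notation that DirectPV parses itself (the command relies on it), not bash brace expansion, which uses two dots. The sketch below, assuming bash, shows why the pattern reaches DirectPV untouched: bash expands the two-dot form before running the command, but leaves the three-dot form as a literal word.

```shell
#!/usr/bin/env bash
# Compare how bash treats two-dot and three-dot brace patterns.

# Two dots: a bash sequence expression, expanded before the command runs.
two_dots=$(echo /dev/sd{a..c})
echo "$two_dots"       # /dev/sda /dev/sdb /dev/sdc

# Three dots: not a valid bash sequence, so bash leaves the word as-is and
# the command (DirectPV, in this tutorial) receives the literal pattern.
three_dots=$(echo /dev/sd{a...c})
echo "$three_dots"     # /dev/sd{a...c}

# The node pattern used above behaves the same way.
nodes=$(echo demo-worker-0{1...3})
echo "$nodes"          # demo-worker-0{1...3}
```

Quoting the patterns, for example --drives '/dev/sd{a...f}', makes it explicit that the expansion is left to DirectPV.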

Installing MinIO Operator

There are two ways to install the MinIO Operator:

- Through Krew using the minio plugin;

- Through Helm using the MinIO Operator Helm Chart, allowing us to automatically configure the MinIO Operator and MinIO Tenant from YAML values files.

Both are valid options, and the final result of each installation is identical.

Krew is already installed, since we used it previously for DirectPV’s installation. It is a convenient way to learn how MinIO works, as the process is broken down into multiple commands.

Helm can install the MinIO Operator as well. Only two commands are necessary to deploy the MinIO Operator and the initial MinIO Tenant. Following the Helm philosophy, this method provides declarative configuration and automation.

MinIO Operator with Krew

Proceed to install the minio Krew plugin and initialize the MinIO Operator:

kubectl krew install minio

kubectl minio init

Wait until the operator pods are running; check their status with kubectl get pods -n minio-operator.

With the Operator running, a Tenant must now be created. This is either done through the MinIO console as shown here, or through the command-line. The MinIO console is accessed by temporarily forwarding ports from the host machine to the console pod. Open a separate terminal window connected to the master node and run the following command, which does not return:

kubectl minio proxy -n minio-operator

# Starting port forward of the Console UI.

# To connect open a browser and go to http://localhost:9090

# Current JWT to login: <JWT_TOKEN>

Open your web browser. The address used to connect to the console depends on your setup:

- If there is no Ingress controller in your cluster, use your cluster control-plane IP instead of localhost, for example http://192.168.56.10:9090 for the cluster provided in the prerequisites;

- If an Ingress controller exists in your cluster, using this proxy is not possible without further setup. A better way to handle Ingresses with MinIO is to install the MinIO Operator with the Helm Chart instead and enable Ingress in the values files.

Once the operator console loads, use the provided JWT to authenticate.

If you are faced with a blank page after the JWT prompt, the browser most likely does not trust the Certificate Authority. Switching to another web browser may solve the issue. For further help, follow instructions here.

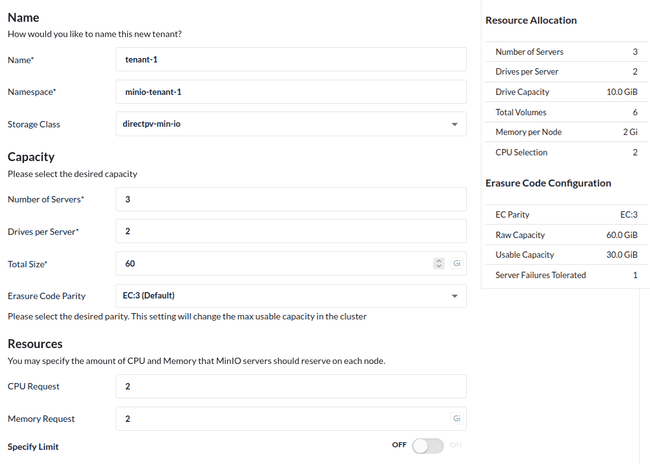

After authenticating, click the Create Tenant button on the top right. A setup page opens.

The fields are documented in the MinIO tenant setup documentation. For this S3 object storage test, fill in the fields as follows, with the Total Size field set to a value within the total capacity of your formatted node disks:

- Name: tenant-1;

- Namespace: minio-tenant-1;

- Storage Class: directpv-min-io;

- Number of Servers: 3;

- Drives per Server: 2;

- Total Size: 60 Gi.
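As a sanity check on these values: assuming the console splits Total Size evenly across all volumes (Number of Servers × Drives per Server), each of the 3 × 2 = 6 volumes requests 60 Gi / 6 = 10 Gi, which fits on the 20 GiB drives formatted earlier (Gi and GiB both denote 2^30 bytes). The hypothetical helpers below perform the same arithmetic with shell integer division:

```shell
#!/usr/bin/env bash
# Hypothetical helpers checking a tenant's Total Size against drive capacity.
# Assumption: Total Size is split evenly over servers * drives-per-server
# volumes, and each volume is placed on one DirectPV drive. Sizes in Gi.

# Per-volume size in Gi (integer division, rounds down).
per_volume_gi() {
  local total_gi="$1" servers="$2" drives_per_server="$3"
  echo $(( total_gi / (servers * drives_per_server) ))
}

# Succeeds (exit status 0) when each volume fits on a single drive.
fits_on_drives() {
  local total_gi="$1" servers="$2" drives_per_server="$3" drive_gi="$4"
  local volume_gi
  volume_gi=$(per_volume_gi "$total_gi" "$servers" "$drives_per_server")
  (( volume_gi <= drive_gi ))
}

# Values from this tutorial: 60 Gi in total, 3 servers, 2 drives each, 20 GiB drives.
per_volume_gi 60 3 2                      # prints 10
fits_on_drives 60 3 2 20 && echo "fits"   # prints fits
```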

It is also helpful to disable TLS in the Security tab during this tutorial: this allows us to connect using the MinIO Client later on without certificates.

Upon creation, you are provided with S3 credentials. Note them down or save them on your computer in a temporary file for later use during testing.

MinIO then attempts to deploy the tenant pods. Checking their status is either done in the Pods tab of the operator console, or with kubectl get pods -n minio-tenant-1. The console displays a green dot next to the tenant status when object storage is ready.
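Instead of polling kubectl get pods by hand, you can wait for the rollout to finish. A minimal sketch, assuming kubectl is configured for the cluster and, as with current operator versions, that tenant pods are managed by StatefulSets; wait_for_workloads is a hypothetical helper and the 600-second timeout is arbitrary. It returns an error if no workloads exist yet:

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until every Deployment and StatefulSet in a
# namespace has finished rolling out.
# Assumptions: kubectl points at the tutorial cluster; 600s is an arbitrary timeout.
wait_for_workloads() {
  local ns="$1"
  local workloads w
  # "-o name" prints entries such as statefulset.apps/<name>, one per line.
  workloads=$(kubectl get deployments,statefulsets -n "$ns" -o name)
  if [ -z "$workloads" ]; then
    echo "no Deployments or StatefulSets in namespace $ns yet" >&2
    return 1
  fi
  for w in $workloads; do
    kubectl rollout status "$w" -n "$ns" --timeout=600s || return 1
  done
}
```

For example, run wait_for_workloads minio-tenant-1, then kubectl get pvc -n minio-tenant-1 to list the volume claims bound to DirectPV drives. The same helper works for the minio-operator namespace.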

If those pods fail to be created, the operator console provides convenient investigation tools. The Events tab lists all events in the tenant namespace. In the Pods tab, clicking a pod gives access to its Logs tab.

MinIO Operator with the Helm chart

This part covers steps for an alternate, more advanced install. Skip to testing the object storage instance if this other install is not necessary in your case.

Krew follows a step-by-step approach to install MinIO and create a tenant. The next step is declarative configuration and automation, instead of manually accessing the operator console. This is where the MinIO Operator Helm Chart and the MinIO Tenant Helm Chart shine.

Helm manages the deployment of Kubernetes applications and their configuration. Applications are packaged as Charts, which are configured through YAML values files. Using those files for the configuration allows for more flexible, declarative setups.

To get started with this install, first install Helm:

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

chmod 700 get_helm.sh

./get_helm.sh

Then, download the operator values file and, optionally, the tenant values file.

curl -fsSL -o operator-values.yaml https://raw.githubusercontent.com/minio/operator/master/helm/operator/values.yaml

curl -fsSL -o tenant-values.yaml https://raw.githubusercontent.com/minio/operator/master/helm/tenant/values.yaml

In this case, the MinIO operator and tenant are configured in their respective values files. Starting with the provided default values files is recommended. Change the values in these files as described below with an editor, for example nano operator-values.yaml and nano tenant-values.yaml.

The most important fields for the MinIO configuration lie in the tenant values:

- tenant.pools.servers controls the number of pods hosting MinIO server instances. As this tutorial assumes 3 nodes, the appropriate value is 3;

- tenant.pools.volumesPerServer needs to match the number of drives per node. The final number of object storage volumes is the number of volumes per server times the number of servers. Note that additional, separate volumes appear when logging or metrics features are enabled;

- tenant.pools.storageClassName needs to be set to directpv-min-io to let MinIO use DirectPV’s volumes;

- Setting tenant.certificate.requestAutoCert to false disables TLS for MinIO for this tutorial, allowing the MinIO Client to connect to object storage without certificates.

The two following fields are optional:

- tenant.pools.tolerations enables MinIO to tolerate the taints of dedicated storage nodes;

- tenant.ingress.api.enabled and tenant.ingress.console.enabled both need to be set to true if you are using an Ingress controller within the cluster. Set the ingressClassName according to the Ingress controller type, and fill in the host fields with domain names like minio.mydomain.com and minio-console.mydomain.com respectively.
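The default values files are long, so locating these fields before editing helps. A minimal sketch, assuming the keys appear in tenant-values.yaml under the names listed above (the pool size field is included as well); show_tenant_fields is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print, with line numbers, the tenant values fields
# discussed above so they are quick to find before editing.
# Assumption: the fields appear as YAML keys, possibly as the first key of a
# list item ("- servers: ...").
show_tenant_fields() {
  local file="${1:-tenant-values.yaml}"
  grep -n -E '^[[:space:]]*(- )?(servers|volumesPerServer|size|storageClassName|requestAutoCert|tolerations):' "$file"
}
```

Running show_tenant_fields tenant-values.yaml prints lines such as 3:    - servers: 4, whose prefix is the line number to jump to in your editor.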

The operator values are more straightforward: only the Ingress field and toleration fields, when required, need to be set.

Note that installing the tenant through Helm is not mandatory. It can also be created manually through the operator web console as covered in the Krew installation part. However, the Helm install bundles everything together, including Ingress creation associated with all MinIO services.

Once the desired configuration is set in the values files, install the operator and the tenant charts:

helm repo add minio https://operator.min.io/

helm upgrade --install --namespace minio-operator --create-namespace minio-operator minio/operator --values operator-values.yaml

helm upgrade --install --namespace minio-tenant-1 --create-namespace minio-tenant-1 minio/tenant --values tenant-values.yaml

Temporary access to the MinIO operator console from the host machine is done through the following command, from another terminal:

kubectl port-forward --address 0.0.0.0 -n minio-operator service/console 9090:9090

Navigate to the control plane address on port 9090 in a web browser to access the console. On the provided demo cluster, the correct address is http://192.168.56.10:9090. The JWT to authenticate is obtained with the following command:

kubectl -n minio-operator get secret console-sa-secret -o jsonpath="{.data.token}" | base64 --decode

Testing the object storage

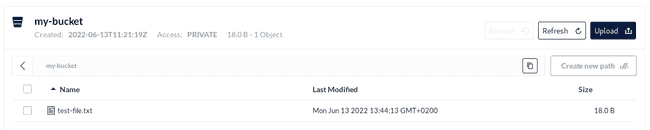

With the tenant running in a healthy state (indicated by a green dot in the operator console), it is possible to directly test our S3 object storage instance from the operator console. From the list of tenants, click on the tenant and click on the Console button on the top right, redirecting you to the Tenant management console.

This tenant being empty at first, start by creating a bucket, e.g my-bucket. Upload an example file using the Upload button on the top right. Once uploaded, the file appears as follows:

Clicking on the file allows you to download it back, confirming that object storage hosting was successful!

Access to this bucket is granted freely from the operator console, but is otherwise granted through the S3 credentials generated when creating the tenant.

Using the MinIO Client to access object storage

The MinIO Server providing object storage is now established. Outside the MinIO web console, it can be accessed either programmatically with SDKs such as the MinIO JavaScript SDK on npm, or through S3 CLI clients such as the MinIO Client, mc.

Authorized actions for object storage users are defined as policies, as MinIO uses Policy-Based Access Control (PBAC). Those policies range from readonly to consoleAdmin, and are set either from the MinIO console or using the MinIO Client’s mc admin policy command.

Install mc:

wget https://dl.min.io/client/mc/release/linux-amd64/mc

chmod +x mc

Then:

- Fetch the S3 access key and secret key. They are required in order to connect to any S3 storage. In the Krew install, those were previously saved. Otherwise, creating new credentials is explained below;

- An S3 endpoint is required. Setups with Ingresses and load-balancers display this endpoint in the Summary tab of the tenant. Otherwise, the endpoint needs to be port-forwarded to be accessed from the VM using mc. Since port-forwarding does not return, run this command in another terminal window on the master node:

kubectl port-forward -n minio-tenant-1 service/minio 8080:80

The resulting endpoint is http://127.0.0.1:8080.
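Before configuring mc, you can check that the forwarded endpoint answers. MinIO exposes an unauthenticated liveness endpoint at /minio/health/live; the sketch below assumes curl is installed and that TLS was disabled as described earlier. minio_is_live is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a MinIO endpoint answers its liveness probe.
# Assumptions: curl is installed and the endpoint is plain HTTP (TLS disabled).
minio_is_live() {
  local endpoint="${1:-http://127.0.0.1:8080}"
  # -f: fail on HTTP errors, -sS: no progress bar but keep error messages,
  # --max-time: give up after 5 seconds instead of hanging.
  curl -fsS -o /dev/null --max-time 5 "${endpoint%/}/minio/health/live"
}
```

For example: minio_is_live http://127.0.0.1:8080 && echo "MinIO is reachable".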

If you wish to use different credentials, or if the tenant creation did not generate them, it is possible to create new S3 access and secret keys from the tenant console. To do so:

- Go to the tenant in the operator console, open the tenant’s console using the Console button on the top right, expand the Identity tab and navigate to Users;

- Click on Create User and provide a name and a password, which function as an access/secret key pair;

- Enable the readwrite policy for this user.
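The same user can also be created from the command line. A minimal sketch, assuming ./mc is the client downloaded above and that the alias passed in (for example my-minio-server, defined with mc alias set below) uses credentials allowed to administer the tenant; create_readwrite_user is a hypothetical helper, and the policy subcommand depends on your mc release:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an S3 user and grant it the built-in readwrite policy.
# Assumptions: ./mc is the client downloaded above and the alias given as $1
# has admin rights on the tenant.
create_readwrite_user() {
  local mc_alias="$1" user_name="$2" user_secret="$3"
  # The user name acts as the access key and the password as the secret key
  # (MinIO requires secret keys of at least 8 characters).
  ./mc admin user add "$mc_alias" "$user_name" "$user_secret" || return 1
  # Recent mc releases; older releases used instead:
  #   ./mc admin policy set "$mc_alias" readwrite user="$user_name"
  ./mc admin policy attach "$mc_alias" readwrite --user "$user_name"
}
```

For example: create_readwrite_user my-minio-server test-user 'a-long-secret-key'.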

The following command sets the S3 configuration as an alias in mc:

./mc alias set my-minio-server <MINIO-ENDPOINT> <ACCESS-KEY> <SECRET-KEY>

mc provides different commands to perform common file actions such as ls, cp and mv, and also has specific features such as creating buckets or running SQL queries against objects. To get a full list of those commands, run ./mc -h.

Putting a file in MinIO or any S3 object storage is done through the cp command found in most S3 clients. Let’s create a test bucket, put an example text file in S3 storage and retrieve it as a simple way to illustrate its usage:

echo Hello, S3 Storage > /tmp/test-file.txt

./mc mb my-minio-server/test-bucket

./mc cp /tmp/test-file.txt my-minio-server/test-bucket

./mc cp my-minio-server/test-bucket/test-file.txt /tmp/test-file-from-s3.txt

cat /tmp/test-file-from-s3.txt

# Hello, S3 Storage

The mc cp command supports globs.

Note that test-file.txt now exists in the object storage and is accessible from the MinIO console, in the new bucket.
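Rather than comparing the two files by eye, the round trip can be checked byte for byte. A minimal sketch using cmp, with the file paths from the example above; same_content is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if two files are byte-for-byte identical.
same_content() {
  # cmp -s prints nothing and exits 0 only when both files are identical.
  cmp -s "$1" "$2"
}

if same_content /tmp/test-file.txt /tmp/test-file-from-s3.txt; then
  echo "round trip OK"
else
  echo "files differ or are missing" >&2
fi
```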

Dedicated nodes for storage

In the setup provided here, worker nodes also handle storage devices, but it is possible to use Kubernetes Taints and tolerations to use dedicated storage nodes. Taints prevent regular jobs from running on those low-spec nodes, and MinIO is given tolerations to use the nodes for data storage.

If this setup is desired, the DirectPV install must be given the matching node selector and tolerations:

kubectl directpv install --node-selector 'storage-node=true' --tolerations 'storage-node=true:NoSchedule'

Tolerations also need to be given when creating a MinIO tenant, under the Pod Placement tab if using the Operator console, or as tolerations in the values files if using the Helm charts.
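The node selector and toleration in the DirectPV command above only match nodes that carry the corresponding label and taint, which therefore need to be applied to the storage nodes beforehand. A minimal sketch, where prepare_storage_nodes is a hypothetical helper and the node names passed to it are placeholders:

```shell
#!/usr/bin/env bash
# Hypothetical helper: label and taint dedicated storage nodes so that they
# match the DirectPV node selector and tolerations used above.
# Assumption: the node names passed as arguments are placeholders.
prepare_storage_nodes() {
  local node
  for node in "$@"; do
    # Matches --node-selector 'storage-node=true'.
    kubectl label nodes "$node" storage-node=true --overwrite || return 1
    # Matches --tolerations 'storage-node=true:NoSchedule': new pods without
    # this toleration are no longer scheduled on the node.
    kubectl taint nodes "$node" storage-node=true:NoSchedule --overwrite || return 1
  done
}
```

For example: prepare_storage_nodes demo-storage-01 demo-storage-02 demo-storage-03.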

Why MinIO over Ceph?

Ceph is another storage solution, providing object storage as one of its three storage modes along with file systems and block storage. We cover its components and installation steps in the following article in the series. Here are the key points comparing both:

Pros:

- MinIO is simpler to deploy in a cluster than standalone Ceph, although Rook greatly helps in simplifying this process;

- MinIO has more tools to make S3 object storage consumption easy, with dedicated MinIO SDKs and a MinIO Client compatible with any S3-compatible object storage solution.

Cons:

- Documentation-wise, MinIO’s documentation is not as clear as Ceph’s or Rook’s: some details are missing and some parts can be confusing;

- The MinIO operator console is not perfect and suffers from some bugs.

Conclusion

Object storage is a data architecture well suited to Big Data scenarios, and deploying a local object storage solution allows us to understand how it works and how to leverage it in applications processing this data.

MinIO offers the benefits of being deployed quickly and configured with relative ease for a basic setup while still allowing for advanced configuration for production scenarios or integration with other services. As an S3-compliant object storage solution, it is compatible with any S3 client.

While we covered the usage of the MinIO Client mc to access object storage, usage of other S3 CLI clients does not differ greatly. As for use in programs, AWS SDKs or MinIO SDKs require minimal configuration to work with your on-prem object storage.

More complex configuration steps for object storage with MinIO include data replication across buckets. Steps on how to achieve it are given in the official documentation.